
This project demonstrates a comprehensive approach to sentiment analysis using the IMDB movie review dataset. By leveraging deep learning techniques with Keras and GloVe word embeddings, the model classifies reviews into positive and negative sentiments.

rishimule/Sentiment-Analysis-of-Movie-Reviews


Sentiment analysis on movie review data.

This project aims to perform sentiment analysis on the IMDB movie review dataset. It utilizes deep learning techniques, particularly LSTM and Conv1D layers, to classify movie reviews into positive and negative sentiments. The model is built using Keras and GloVe embeddings for word representations.

Project Structure

- Sentiment Analysis of Movie Review using Keras.ipynb: The main notebook containing code for data preprocessing, model building, training, and evaluation.

- dataSentimental/IMDB Dataset/IMDB Dataset.csv: The dataset containing movie reviews.

- dataSentimental/glove/glove.840B.300d.pkl: Pre-trained GloVe embeddings used for word representation.

- trained_model/Sentiment_Analysis/imdb_model.h5: The saved model after training.

Libraries Used

- TensorFlow & Keras

- scikit-learn

Key Features

- Data Preprocessing: Handling missing values, encoding labels, and data cleaning to remove unwanted characters and contractions.

- Data Visualization: Visualizing the most frequent words in positive and negative reviews using WordCloud.

- GloVe Embeddings: Using pre-trained GloVe embeddings to enhance the model's understanding of word semantics.

- Model Architecture: A combination of Conv1D, LSTM, and Dense layers with dropout for reducing overfitting.

- Model Evaluation: Evaluating model performance using metrics like accuracy and loss.
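The cleaning step listed under Data Preprocessing could look roughly like the following plain-Python sketch. It is not the notebook's code. The clean_review name, the deliberately tiny CONTRACTIONS map, and the regular expressions are all illustrative assumptions.

```python
import re

# Hypothetical, deliberately tiny contraction map; a real project would use a fuller list.
CONTRACTIONS = {
    "don't": "do not",
    "can't": "cannot",
    "isn't": "is not",
    "it's": "it is",
    "i'm": "i am",
}
# Match whole contractions only, so a contraction is never expanded inside a longer word.
CONTRACTION_PATTERN = re.compile(r"\b(" + "|".join(map(re.escape, CONTRACTIONS)) + r")\b")


def clean_review(text: str) -> str:
    """Lowercase a review, expand contractions, and strip unwanted characters."""
    text = text.lower()
    # IMDB reviews contain HTML line breaks such as "<br />"; replace any tag with a space.
    text = re.sub(r"<[^>]+>", " ", text)
    # Expand contractions while the apostrophes are still present.
    text = CONTRACTION_PATTERN.sub(lambda match: CONTRACTIONS[match.group(0)], text)
    # Keep only lowercase letters and whitespace.
    text = re.sub(r"[^a-z\s]", " ", text)
    # Collapse runs of whitespace into single spaces.
    return re.sub(r"\s+", " ", text).strip()


print(clean_review("I don't like it.<br /><br />It's 2 hours TOO long!"))
# i do not like it it is hours too long
```

Contractions are expanded before punctuation is removed. Stripping the apostrophes first would leave fragments such as "don t" behind.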

Clone the repository:

Navigate to the project directory:

Install the required libraries:

Run the Jupyter notebook Sentiment Analysis of Movie Review using Keras.ipynb to preprocess data, train the model, and evaluate its performance.

The model achieved a training accuracy of X% and a validation accuracy of Y%. The loss and accuracy plots indicate the model's learning curve over the epochs.

- Hashir Khan

This project is licensed under the MIT License - see the LICENSE file for details.


Use Sentiment Analysis With Python to Classify Movie Reviews


Sentiment analysis is a powerful tool that allows computers to understand the underlying subjective tone of a piece of writing. This is something that humans have difficulty with, and as you might imagine, it isn’t always so easy for computers, either. But with the right tools and Python, you can use sentiment analysis to better understand the sentiment of a piece of writing.

Why would you want to do that? There are a lot of uses for sentiment analysis, such as understanding how stock traders feel about a particular company by using social media data or aggregating reviews, which you’ll get to do by the end of this tutorial.

In this tutorial, you’ll learn:

- How to use natural language processing (NLP) techniques

- How to use machine learning to determine the sentiment of text

- How to use spaCy to build an NLP pipeline that feeds into a sentiment analysis classifier

This tutorial is ideal for beginning machine learning practitioners who want a project-focused guide to building sentiment analysis pipelines with spaCy.

You should be familiar with basic machine learning techniques like binary classification as well as the concepts behind them, such as training loops, data batches, and weights and biases. If you’re unfamiliar with machine learning, then you can kickstart your journey by learning about logistic regression.


Using Natural Language Processing to Preprocess and Clean Text Data

Any sentiment analysis workflow begins with loading data. But what do you do once the data’s been loaded? You need to process it through a natural language processing pipeline before you can do anything interesting with it.

The necessary steps include (but aren’t limited to) the following:

- Tokenizing sentences to break text down into sentences, words, or other units

- Removing stop words like “if,” “but,” “or,” and so on

- Normalizing words by condensing all forms of a word into a single form

- Vectorizing text by turning the text into a numerical representation for consumption by your classifier

All these steps serve to reduce the noise inherent in any human-readable text and improve the accuracy of your classifier’s results. There are lots of great tools to help with this, such as the Natural Language Toolkit, TextBlob, and spaCy. For this tutorial, you’ll use spaCy.

Note: spaCy is a very powerful tool with many features. For a deep dive into many of these features, check out Natural Language Processing With spaCy.

Before you go further, make sure you have spaCy and its English model installed:

The first command installs spaCy, and the second uses spaCy to download its English language model. spaCy supports a number of different languages, which are listed on the spaCy website.

Warning: This tutorial only works with spaCy 2.x and is not compatible with spaCy 3.0. For the best experience, please install a spaCy 2.x release.

Next, you’ll learn how to use spaCy to help with the preprocessing steps you learned about earlier, starting with tokenization.

Tokenization is the process of breaking down chunks of text into smaller pieces. spaCy comes with a default processing pipeline that begins with tokenization, making this process a snap. In spaCy, you can do either sentence tokenization or word tokenization:

- Word tokenization breaks text down into individual words.

- Sentence tokenization breaks text down into individual sentences.

In this tutorial, you’ll use word tokenization to separate the text into individual words. First, you’ll load the text into spaCy, which does the work of tokenization for you:

In this code, you set up some example text to tokenize, load spaCy’s English model, and then tokenize the text by passing it to nlp(). This model includes a default processing pipeline that you can customize, as you’ll see later in the project section.

After that, you generate a list of tokens and print it. As you may have noticed, “word tokenization” is a slightly misleading term, as captured tokens include punctuation and other nonword strings.
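spaCy's tokenizer uses language-specific rules, so the snippet below is only a rough, dependency-free approximation. It is not spaCy's API: sentence_tokenize, word_tokenize, and their regular expressions are illustrative assumptions. It shows the two kinds of tokenization and why punctuation marks become tokens of their own.

```python
import re
from typing import List


def sentence_tokenize(text: str) -> List[str]:
    """Split after '.', '!' or '?' when followed by whitespace (a naive rule)."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def word_tokenize(text: str) -> List[str]:
    """Return runs of word characters plus each punctuation mark as its own token."""
    return re.findall(r"\w+|[^\w\s]", text)


text = "The plot was thin. Still, I loved the soundtrack!"
print(sentence_tokenize(text))
# ['The plot was thin.', 'Still, I loved the soundtrack!']
print(word_tokenize(text))
# ['The', 'plot', 'was', 'thin', '.', 'Still', ',', 'I', 'loved', 'the', 'soundtrack', '!']
```

Abbreviations such as "Dr." would break the naive sentence rule. Edge cases like this are one reason to rely on spaCy's tokenizer in practice.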

Tokens are an important container type in spaCy and have a very rich set of features. In the next section, you’ll learn how to use one of those features to filter out stop words.

Stop words are words that may be important in human communication but are of little value for machines. spaCy comes with a default list of stop words that you can customize. For now, you’ll see how you can use token attributes to remove stop words:

In one line of Python code, you filter out stop words from the tokenized text using the .is_stop token attribute.

What differences do you notice between this output and the output you got after tokenizing the text? With the stop words removed, the token list is much shorter, and there’s less context to help you understand the tokens.
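Outside spaCy, the same filtering idea is a single comprehension. The sketch below uses a small hypothetical STOP_WORDS set in place of spaCy's built-in list, and a plain membership test stands in for the .is_stop attribute.

```python
from typing import List

# Hypothetical stop list for illustration; spaCy's default English list is much longer.
STOP_WORDS = {"a", "an", "and", "but", "i", "if", "it", "of", "or", "the", "was"}


def remove_stop_words(tokens: List[str]) -> List[str]:
    """Keep only tokens whose lowercase form is not a stop word."""
    return [token for token in tokens if token.lower() not in STOP_WORDS]


tokens = ["The", "plot", "was", "thin", ",", "but", "I", "loved", "the", "soundtrack"]
print(remove_stop_words(tokens))
# ['plot', 'thin', ',', 'loved', 'soundtrack']
```

Dropping "but" here also removes the contrast between the two clauses. This is the kind of lost context just described.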

Normalization is a little more complex than tokenization. It entails condensing all forms of a word into a single representation of that word. For instance, “watched,” “watching,” and “watches” can all be normalized into “watch.” There are two major normalization methods:

- Stemming

- Lemmatization

With stemming, a word is cut off at its stem, the smallest unit of that word from which you can create the descendant words. You just saw an example of this above with “watch.” Stemming simply truncates the string using common endings, so it will miss the relationship between “feel” and “felt,” for example.

Lemmatization seeks to address this issue. This process uses a data structure that relates all forms of a word back to its simplest form, or lemma. Because lemmatization is generally more powerful than stemming, it’s the only normalization strategy offered by spaCy.

Luckily, you don’t need any additional code to do this. It happens automatically when you call nlp(), along with a number of other activities such as part-of-speech tagging and named entity recognition. You can inspect the lemma for each token by taking advantage of the .lemma_ attribute:

All you did here was generate a readable list of tokens and lemmas by iterating through the filtered list of tokens, taking advantage of the .lemma_ attribute to inspect the lemmas. This example shows only the first few tokens and lemmas. Your output will be much longer.

Note: Notice the underscore on the .lemma_ attribute. That’s not a typo. It’s a spaCy convention: the underscored attribute returns the human-readable string, while the version without the underscore (.lemma) returns an integer hash ID.
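To see why lemmatization catches cases that stemming misses, you can compare toy versions of each. Both functions are simplified illustrations written for this comparison. The suffix list and the lemma lookup table are assumptions and are far smaller than anything a real stemmer or spaCy's lemmatizer uses.

```python
# Toy stemmer: strips a few common suffixes (an illustrative assumption, not Porter's algorithm).
SUFFIXES = ("ing", "ed", "es", "s")


def stem(word: str) -> str:
    for suffix in SUFFIXES:
        # Require at least three characters to remain so short words survive intact.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


# Toy lemmatizer: a hand-written lookup table from word forms to lemmas.
LEMMAS = {"watched": "watch", "watching": "watch", "watches": "watch", "felt": "feel", "feels": "feel"}


def lemmatize(word: str) -> str:
    return LEMMAS.get(word, word)


for word in ["watched", "watching", "watches", "felt"]:
    print(word, stem(word), lemmatize(word))
```

Both approaches reduce the three forms of "watch" correctly. Only the lookup table connects "felt" to "feel", because the suffix rules leave it untouched.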

The next step is to represent each token in a way that a machine can understand. This is called vectorization.

Vectorization is a process that transforms a token into a vector, or a numeric array that, in the context of NLP, is unique to and represents various features of a token. Vectors are used under the hood to find word similarities, classify text, and perform other NLP operations.

This particular representation is a dense array, one in which there are defined values for every space in the array. This is in opposition to earlier methods that used sparse arrays, in which most spaces are empty.
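The sketch below makes the contrast concrete. First it builds a sparse one-hot vector, stored as a dict that holds only its single nonzero position. Then it compares small dense vectors with cosine similarity, a common way to measure word similarity. The vocabulary and the three-dimensional vectors are invented for illustration. Real embedding vectors have far more dimensions and learned values.

```python
import math
from typing import Dict, List

VOCABULARY = ["awful", "boring", "great", "movie", "terrible"]


def one_hot(word: str) -> Dict[int, float]:
    """Sparse representation: store only the index that is nonzero."""
    return {VOCABULARY.index(word): 1.0}


# Invented dense vectors: every position holds a value.
DENSE = {
    "awful": [0.9, -0.8, 0.1],
    "terrible": [0.85, -0.75, 0.2],
    "great": [-0.7, 0.9, 0.1],
}


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


print(one_hot("great"))  # {2: 1.0}
print(cosine_similarity(DENSE["awful"], DENSE["terrible"]))  # close to 1
print(cosine_similarity(DENSE["awful"], DENSE["great"]))  # negative
```

Two different one-hot vectors never share a nonzero position, so they carry no notion of similarity. Dense vectors place related words near each other.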

Like the other steps, vectorization is taken care of automatically with the nlp() call. Since you already have a list of token objects, you can get the vector representation of one of the tokens like so:

Here you use the .vector attribute on the second token in the filtered_tokens list, which in this set of examples is the word Dave.

Note: If you get different results for the .vector attribute, don’t worry. This could be because you’re using a different version of the en_core_web_sm model or, potentially, of spaCy itself.

Now that you’ve learned about some of the typical text preprocessing steps in spaCy, you’ll learn how to classify text.

Using Machine Learning Classifiers to Predict Sentiment

Your text is now processed into a form understandable by your computer, so you can start to work on classifying it according to its sentiment. You’ll cover three topics that will give you a general understanding of machine learning classification of text data:

- What machine learning tools are available and how they’re used

- How classification works

- How to use spaCy for text classification

First, you’ll learn about some of the available tools for doing machine learning classification.

There are a number of tools available in Python for solving classification problems. Here are some of the more popular ones:

- TensorFlow

- PyTorch

- scikit-learn

This list isn’t all-inclusive, but these are the more widely used machine learning frameworks available in Python. They’re large, powerful frameworks that take a lot of time to truly master and understand.

TensorFlow is developed by Google and is one of the most popular machine learning frameworks. You use it primarily to implement your own machine learning algorithms as opposed to using existing algorithms. It’s fairly low-level, which gives the user a lot of power, but it comes with a steep learning curve.

PyTorch is Facebook’s answer to TensorFlow and accomplishes many of the same goals. However, it’s built to be more familiar to Python programmers and has become a very popular framework in its own right. Because they have similar use cases, comparing TensorFlow and PyTorch is a useful exercise if you’re considering learning a framework.

scikit-learn stands in contrast to TensorFlow and PyTorch. It’s higher-level and allows you to use off-the-shelf machine learning algorithms rather than building your own. What it lacks in customizability, it more than makes up for in ease of use, allowing you to quickly train classifiers in just a few lines of code.

Luckily, spaCy provides a fairly straightforward built-in text classifier that you’ll learn about a little later. First, however, it’s important to understand the general workflow for any sort of classification problem.

Don’t worry: in this section you won’t go deep into linear algebra, vector spaces, or other esoteric concepts that power machine learning in general. Instead, you’ll get a practical introduction to the workflow and constraints common to classification problems.

Once you have your vectorized data, a basic workflow for classification looks like this:

- Split your data into training and evaluation sets.

- Select a model architecture.

- Use training data to train your model.

- Use test data to evaluate the performance of your model.

- Use your trained model on new data to generate predictions, which in this case will be a score between 0.0 and 1.0 for each label.

This list isn’t exhaustive, and there are a number of additional steps and variations you can try in an attempt to improve accuracy. For example, machine learning practitioners often split their datasets into three sets:

- Training

- Validation

- Test

The training set, as the name implies, is used to train your model. The validation set is used to help tune the hyperparameters of your model, which can lead to better performance.

Note: Hyperparameters control the training process and structure of your model and can include things like learning rate and batch size. However, which hyperparameters are available depends very much on the model you choose to use.

The test set is kept apart from both training and tuning, and it should incorporate a wide variety of data so that it accurately judges how the model performs on examples it hasn’t seen. Test sets are often used to compare multiple models, including the same model at different stages of training.
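A three-way split needs only the standard library. In the sketch below, the train_validation_test_split name and the 70/15/15 default ratios are illustrative assumptions, not values taken from this tutorial.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_validation_test_split(
    items: Sequence[T],
    validation_ratio: float = 0.15,
    test_ratio: float = 0.15,
    seed: int = 0,
) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle a copy of items, then cut it into training, validation, and test sets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_test = int(len(shuffled) * test_ratio)
    n_validation = int(len(shuffled) * validation_ratio)
    test = shuffled[:n_test]
    validation = shuffled[n_test : n_test + n_validation]
    train = shuffled[n_test + n_validation :]
    return train, validation, test


train, validation, test = train_validation_test_split(range(100))
print(len(train), len(validation), len(test))  # 70 15 15
```

The function shuffles once before slicing, so each item lands in exactly one of the three sets.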

Now that you’ve learned the general flow of classification, it’s time to put it into action with spaCy.

You’ve already learned how spaCy does much of the text preprocessing work for you when you call nlp(). This is really helpful since training a classification model requires many examples to be useful.

Additionally, spaCy provides a pipeline functionality that powers much of the magic that happens under the hood when you call nlp(). The default pipeline is defined in a JSON file associated with whichever preexisting model you’re using (en_core_web_sm for this tutorial), but you can also build one from scratch if you wish.

Note: To learn more about creating your own language processing pipelines, check out the spaCy pipeline documentation.

What does this have to do with classification? One of the built-in pipeline components that spaCy provides is called textcat (short for TextCategorizer), which enables you to assign categories (or labels) to your text data and use that as training data for a neural network.

This process will generate a trained model that you can then use to predict the sentiment of a given piece of text. To take advantage of this tool, you’ll need to do the following steps:

- Add the textcat component to the existing pipeline.

- Add valid labels to the textcat component.

- Load, shuffle, and split your data.

- Train the model, evaluating on each training loop.

- Use the trained model to predict the sentiment of non-training data.

- Optionally, save the trained model.

Note: You can see an implementation of these steps in the spaCy documentation examples. This is the main way to classify text in spaCy, so you’ll notice that the project code draws heavily from this example.

In the next section, you’ll learn how to put all these pieces together by building your own project: a movie review sentiment analyzer.

Building Your Own NLP Sentiment Analyzer

From the previous sections, you’ve probably noticed four major stages of building a sentiment analysis pipeline:

- Loading data

- Preprocessing

- Training the classifier

- Classifying data

For building a real-life sentiment analyzer, you’ll work through each of the steps that compose these stages. You’ll use the Large Movie Review Dataset compiled by Andrew Maas to train and test your sentiment analyzer. Once you’re ready, proceed to the next section to load your data.

If you haven’t already, download and extract the Large Movie Review Dataset. Spend a few minutes poking around, taking a look at its structure, and sampling some of the data. This will inform how you load the data. For this part, you’ll use spaCy’s textcat example as a rough guide.

You can (and should) decompose the loading stage into concrete steps to help plan your coding. Here’s an example:

- Load text and labels from the file and directory structures.

- Shuffle the data.

- Split the data into training and test sets.

- Return the two sets of data.

This process is relatively self-contained, so it should be its own function at least. In thinking about the actions that this function would perform, you may have thought of some possible parameters.

Since you’re splitting data, the ability to control the size of those splits may be useful, so split is a good parameter to include. You may also wish to limit the total number of documents you process with a limit parameter. You can open your favorite editor and add this function signature:

With this signature, you take advantage of Python 3’s type annotations to make it absolutely clear which types your function expects and what it will return.

The parameters here allow you to define the directory in which your data is stored as well as the ratio of training data to test data. A good ratio to start with is 80 percent of the data for training data and 20 percent for test data. All of this and the following code, unless otherwise specified, should live in the same file.

Next, you’ll want to iterate through all the files in this dataset and load them into a list:

While this may seem complicated, what you’re doing is constructing the directory structure of the data, looking for and opening text files, then appending a tuple of the contents and a label dictionary to the reviews list.

The label dictionary structure is a format required by the spaCy model during the training loop, which you’ll see soon.

Note: Throughout this tutorial and throughout your Python journey, you’ll be reading and writing files. This is a foundational skill to master, so make sure to review it while you work through this tutorial.

Since you have each review open at this point, it’s a good idea to replace the <br /> HTML tags in the texts with newlines and to use .strip() to remove all leading and trailing whitespace.

For this project, you won’t remove stop words from your training data right away because it could change the meaning of a sentence or phrase, which could reduce the predictive power of your classifier. This is dependent somewhat on the stop word list that you use.

After loading the files, you want to shuffle them. This works to eliminate any possible bias from the order in which training data is loaded. Since the random module makes this easy to do in one line, you’ll also see how to split your shuffled data:

Here, you shuffle your data with a call to random.shuffle() . Then you optionally truncate and split the data using some math to convert the split to a number of items that define the split boundary. Finally, you return two parts of the reviews list using list slices.

Here’s a sample output, truncated for brevity:

To learn more about how random works, take a look at Generating Random Data in Python (Guide).
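Combined, the loading, cleaning, shuffling, and splitting steps described above might look like the sketch below. It rests on several assumptions. The directory you pass in is assumed to hold reviews as .txt files in pos and neg subdirectories (for example, aclImdb/train). The default path and the exact load_data signature are also assumptions, not the tutorial's verbatim code. Each label dictionary is assumed to take the form {"cats": {"pos": ..., "neg": ...}}.

```python
import os
import random
from typing import Dict, List, Tuple

Review = Tuple[str, Dict[str, Dict[str, bool]]]


def load_data(
    data_directory: str = "aclImdb/train",  # assumed location of the extracted dataset
    split: float = 0.8,
    limit: int = 0,
) -> Tuple[List[Review], List[Review]]:
    """Load labeled reviews, shuffle them, and split them into training and test sets."""
    reviews: List[Review] = []
    for label in ["pos", "neg"]:
        labeled_directory = os.path.join(data_directory, label)
        for filename in sorted(os.listdir(labeled_directory)):
            if not filename.endswith(".txt"):
                continue
            with open(os.path.join(labeled_directory, filename), encoding="utf-8") as f:
                # Replace HTML line breaks with newlines and trim surrounding whitespace.
                text = f.read().replace("<br />", "\n\n").strip()
            if text:  # skip empty files
                spacy_label = {"cats": {"pos": label == "pos", "neg": label == "neg"}}
                reviews.append((text, spacy_label))
    random.shuffle(reviews)  # remove any ordering bias from the directory walk
    if limit:
        reviews = reviews[:limit]
    boundary = int(len(reviews) * split)  # e.g. split=0.8 keeps 80% for training
    return reviews[:boundary], reviews[boundary:]
```

For example, train, test = load_data(limit=2500) would return roughly 2,000 training reviews and 500 test reviews, assuming the dataset sits at the default path.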

Note: The makers of spaCy have also released a package called thinc that, among other features, includes simplified access to large datasets, including the IMDB review dataset you’re using for this project.

You can find the project on GitHub. If you investigate it, look at how they handle loading the IMDB dataset and see what overlaps exist between their code and your own.

Now that you’ve got your data loader built and have some light preprocessing done, it’s time to build the spaCy pipeline and classifier training loop.

Putting the spaCy pipeline together allows you to rapidly build and train a convolutional neural network (CNN) for classifying text data. While you’re using it here for sentiment analysis, it’s general enough to work with any kind of text classification task as long as you provide it with the training data and labels.

In this part of the project, you’ll take care of three steps:

- Modifying the base spaCy pipeline to include the textcat component

- Building a training loop to train the textcat component

- Evaluating the progress of your model training after a given number of training loops

First, you’ll add textcat to the default spaCy pipeline.

Modifying the spaCy Pipeline to Include textcat

For the first part, you’ll load the same pipeline as you did in the examples at the beginning of this tutorial, then you’ll add the textcat component if it isn’t already present. After that, you’ll add the labels that your data uses ("pos" for positive and "neg" for negative) to textcat. Once that’s done, you’ll be ready to build the training loop:

If you’ve looked at the spaCy documentation’s textcat example already, then this should look pretty familiar. First, you load the built-in en_core_web_sm pipeline, then you check the .pipe_names attribute to see if the textcat component is already available.

If it isn’t, then you create the component (also called a pipe) with .create_pipe(), passing in a configuration dictionary. The available configuration options are described in the TextCategorizer documentation.

Finally, you add the component to the pipeline using .add_pipe(), passing last=True so that the component is added to the end of the pipeline.

Next, you’ll handle the case in which the textcat component is present and then add the labels that will serve as the categories for your text:

If the component is present in the loaded pipeline, then you just use .get_pipe() to assign it to a variable so you can work on it. For this project, all that you’ll be doing with it is adding the labels from your data so that textcat knows what to look for. You’ll do that with .add_label().

You’ve created the pipeline and prepared the textcat component for the labels it will use for training. Now it’s time to write the training loop that will allow textcat to categorize movie reviews.

Build Your Training Loop to Train textcat

To begin the training loop, you’ll first set your pipeline to train only the textcat component, generate batches of data for it with spaCy’s minibatch() and compounding() utilities, and then go through them and update your model.

A batch is just a subset of your data. Batching your data allows you to reduce the memory footprint during training and to update your model’s weights more often, once per batch instead of once per full pass through the data.

Note: Compounding batch sizes is a relatively new technique and should help speed up training. You can learn more about compounding batch sizes in spaCy’s training tips.
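The dependency-free sketch below shows what compounding batch sizes look like in practice. It defines two generators that behave like the compounding() and minibatch() helpers used in this tutorial. They are simplified re-implementations written for illustration, not spaCy's source. In the project itself, import the real utilities from spacy.util.

```python
import itertools
from typing import Iterable, Iterator, List, TypeVar, Union

T = TypeVar("T")


def compounding(start: float, stop: float, compound: float) -> Iterator[float]:
    """Yield an infinite series start, start*compound, ... clipped at stop."""
    current = float(start)
    while True:
        yield min(current, stop) if start <= stop else max(current, stop)
        current *= compound


def minibatch(items: Iterable[T], size: Union[int, Iterator[float]]) -> Iterator[List[T]]:
    """Group items into lists whose lengths come from size (a fixed int or an iterator)."""
    sizes = itertools.repeat(size) if isinstance(size, int) else size
    iterator = iter(items)
    while True:
        batch = list(itertools.islice(iterator, int(next(sizes))))
        if not batch:
            return
        yield batch


for batch in minibatch(range(10), size=compounding(1.0, 10.0, 2.0)):
    print(batch)
# [0]
# [1, 2]
# [3, 4, 5, 6]
# [7, 8, 9]
```

Early batches are small and later ones grow until they hit the stop value. Because the size generator never runs out, minibatch() ends only when the data does.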

Here’s an implementation of the training loop described above:

First, you create a list of all components in the pipeline that aren’t the textcat component. You then use the nlp.disable_pipes() context manager to disable those components for all code within the context manager’s scope.

Now you’re ready to add the code to begin training:

Here, you call nlp.begin_training(), which returns an optimizer. This is what nlp.update() will use to update the weights of the underlying model.

You then use the compounding() utility to create a generator, giving you an infinite series of batch_sizes that will be used later by the minibatch() utility.

Now you’ll begin training on batches of data:

Now, for each iteration that is specified in the train_model() signature, you create an empty dictionary called loss that will be updated and used by nlp.update() . You also shuffle the training data and split it into batches of varying size with minibatch() .

For each batch, you separate the text and labels, then feed them, the empty loss dictionary, and the optimizer to nlp.update(). This runs the actual training on each example.

The dropout rate passed to nlp.update() (its drop argument) is the probability that each of the model’s internal units is temporarily zeroed out during that update. You do this to make it harder for the model to accidentally just memorize training data without coming up with a generalizable model.
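Dropout is easiest to see on a plain list of activations. The function below is a generic illustration of inverted dropout, one common formulation, and not spaCy's internal code. Each value is zeroed with probability rate, and the surviving values are scaled by 1 / (1 - rate), so the expected value of every position is unchanged.

```python
import random
from typing import List, Optional


def apply_dropout(activations: List[float], rate: float, rng: Optional[random.Random] = None) -> List[float]:
    """Zero each activation with probability rate and rescale the survivors (inverted dropout)."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    rng = rng or random.Random()
    keep_scale = 1.0 / (1.0 - rate)
    return [0.0 if rng.random() < rate else value * keep_scale for value in activations]


print(apply_dropout([0.5, 1.0, 1.5, 2.0], rate=0.5, rng=random.Random(42)))
```

Each call drops a different random subset, so the model cannot rely on any single unit. At prediction time, dropout is switched off.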

This will take some time, so it’s important to periodically evaluate your model. You’ll do that with the data that you held back from the training set, also known as the holdout set .

Evaluating the Progress of Model Training

Since you’ll be doing a number of evaluations, with many calculations for each one, it makes sense to write a separate evaluate_model() function. In this function, you’ll run the documents in your test set against the unfinished model to get your model’s predictions and then compare them to the correct labels of that data.

Using that information, you’ll calculate the following values:

True positives are documents that your model correctly predicted as positive. For this project, this maps to the positive sentiment but generalizes in binary classification tasks to the class you’re trying to identify.

False positives are documents that your model incorrectly predicted as positive but were in fact negative.

True negatives are documents that your model correctly predicted as negative.

False negatives are documents that your model incorrectly predicted as negative but were in fact positive.

Because your model will return a score between 0 and 1 for each label, you’ll determine a positive or negative result based on that score. From the four statistics described above, you’ll calculate precision and recall, which are common measures of classification model performance:

Precision is the ratio of true positives to all items your model marked as positive (true and false positives). A precision of 1.0 means that every review that your model marked as positive belongs to the positive class.

Recall is the ratio of true positives to all reviews that are actually positive, or the number of true positives divided by the total number of true positives and false negatives.

The F-score is another popular performance measure, especially in the world of NLP. Its most common form, the F1 score, is the harmonic mean of precision and recall: 2 * precision * recall / (precision + recall). As with precision and recall, the score ranges from 0 to 1, with 1 signifying the highest performance and 0 the lowest.
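Given predicted scores and gold labels, the counts and the three measures take only a few lines of plain Python. The sketch makes a few assumptions. Each prediction is a cats-style dictionary of label scores, and each gold label is a dictionary of booleans. The score_predictions name and the 0.5 threshold are illustrative choices. The sketch also guards against division by zero, which can happen early in training when the model predicts no positives at all.

```python
from typing import Dict, List


def score_predictions(
    predicted: List[Dict[str, float]],
    gold: List[Dict[str, bool]],
    threshold: float = 0.5,
) -> Dict[str, float]:
    """Compute precision, recall, and F-score with "pos" as the class of interest."""
    true_positives = false_positives = false_negatives = 0
    for scores, truth in zip(predicted, gold):
        predicted_positive = scores["pos"] >= threshold
        if predicted_positive and truth["pos"]:
            true_positives += 1
        elif predicted_positive:
            false_positives += 1  # predicted positive, actually negative
        elif truth["pos"]:
            false_negatives += 1  # predicted negative, actually positive
        # The remaining case is a true negative, which none of these three measures uses.

    predicted_pos = true_positives + false_positives
    actual_pos = true_positives + false_negatives
    # Guard each division so empty or all-negative results give 0.0 instead of an error.
    precision = true_positives / predicted_pos if predicted_pos else 0.0
    recall = true_positives / actual_pos if actual_pos else 0.0
    # F-score (F1): the harmonic mean of precision and recall.
    f_score = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f-score": f_score}


predicted = [{"pos": 0.9, "neg": 0.1}, {"pos": 0.8, "neg": 0.2}, {"pos": 0.3, "neg": 0.7}, {"pos": 0.2, "neg": 0.8}]
gold = [{"pos": True, "neg": False}, {"pos": False, "neg": True}, {"pos": True, "neg": False}, {"pos": False, "neg": True}]
print(score_predictions(predicted, gold))
# {'precision': 0.5, 'recall': 0.5, 'f-score': 0.5}
```

Only the "pos" score is checked. With two mutually exclusive labels, it carries all the information needed to decide the predicted class.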

For evaluate_model(), you’ll need to pass in the pipeline’s tokenizer component, the textcat component, and your test dataset:

In this function, you separate reviews and their labels and then use a generator expression to tokenize each of your evaluation reviews, preparing them to be passed in to textcat. The generator expression is a nice trick recommended in the spaCy documentation that allows you to iterate through your tokenized reviews without keeping every one of them in memory.

You then use the score and true_label to determine true or false positives and true or false negatives. Next, you use those counts to calculate precision, recall, and F-score. Now all that’s left is to actually call evaluate_model():

Here you add a print statement to help organize the output from evaluate_model(), then call evaluate_model() inside the .use_params() context manager so that it evaluates the model in its current state. Finally, you print the results.

Once the training process is complete, it’s a good idea to save the model you just trained so that you can use it again without training a new model. After your training loop, add this code to save the trained model to a directory called model_artifacts located within your working directory:

This snippet saves your model to a directory called model_artifacts so that you can make tweaks without retraining the model. Your final training function should look like this:

In this section, you learned about training a model and evaluating its performance as you train it. You then built a function that trains a classification model on your input data.

Now that you have a trained model, it’s time to test it against a real review. For the purposes of this project, you’ll hardcode a review, but you should certainly try extending this project by reading reviews from other sources, such as files or a review aggregator’s API.

The first step with this new function will be to load the previously saved model. While you could use the model in memory, loading the saved model artifact allows you to optionally skip training altogether, which you’ll see later. Here’s the test_model() signature along with the code to load your saved model:

In this code, you define test_model() , which includes the input_data parameter. You then load your previously saved model.

The IMDB data you’re working with includes an unsup directory within the training data directory that contains unlabeled reviews you can use to test your model. Here’s one such review. You should save it (or a different one of your choosing) in a TEST_REVIEW constant at the top of your file:

Next, you’ll pass this review into your model to generate a prediction, prepare it for display, and then display it to the user:

In this code, you pass your input_data into your loaded_model , which generates a prediction in the cats attribute of the parsed_text variable. You then check the scores of each sentiment and save the highest one in the prediction variable.

You then save that sentiment’s score to the score variable. This will make it easier to create human-readable output, which is the last line of this function.
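The selection step doesn't depend on spaCy, so it can be sketched as a small helper that takes a cats dictionary of label scores. The describe_prediction name and the readable label mapping are assumptions for illustration.

```python
from typing import Dict, Tuple


def describe_prediction(cats: Dict[str, float]) -> Tuple[str, float]:
    """Return the highest-scoring label (as a readable word) and its score."""
    label = max(cats, key=cats.get)
    readable = {"pos": "Positive", "neg": "Negative"}.get(label, label)
    return readable, cats[label]


prediction, score = describe_prediction({"pos": 0.83, "neg": 0.17})
print(f"Predicted sentiment: {prediction}\tScore: {score}")
```

Taking the maximum generalizes to any number of labels. With only "pos" and "neg", it is equivalent to comparing the two scores directly.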

You’ve now written the load_data() , train_model() , evaluate_model() , and test_model() functions. That means it’s time to put them all together and train your first model.

So far, you’ve built a number of independent functions that, taken together, will load data and train, evaluate, save, and test a sentiment analysis classifier in Python.

There’s one last step to make these functions usable, and that is to call them when the script is run. You’ll use the if __name__ == "__main__": idiom to accomplish this:

Here you load your training data with the function you wrote in the Loading and Preprocessing Data section and limit the number of reviews used to 2500 total. You then train the model using the train_model() function you wrote in Training Your Classifier and, once that’s done, you call test_model() to test the performance of your model.

Note: With this number of training examples, training can take ten minutes or longer, depending on your system. You can reduce the training set size for a shorter training time, but you’ll risk having a less accurate model.

What did your model predict? Do you agree with the result? What happens if you increase or decrease the limit parameter when loading the data? Your scores and even your predictions may vary, but here’s what you should expect your output to look like:

As your model trains, you’ll see the measures of loss, precision, and recall and the F-score for each training iteration. You should see the loss generally decrease. The precision, recall, and F-score will all bounce around, but ideally they’ll increase. Then you’ll see the test review, sentiment prediction, and the score of that prediction—the higher the better.

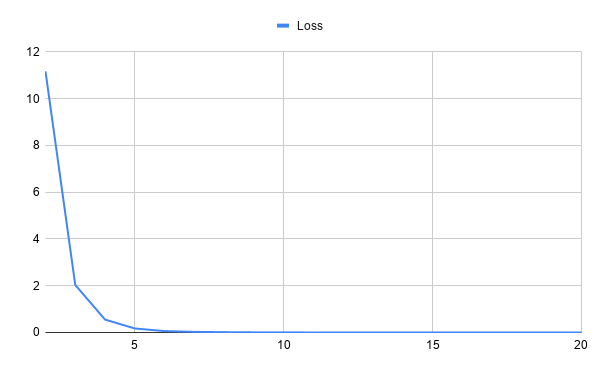

You’ve now trained your first sentiment analysis machine learning model using natural language processing techniques and neural networks with spaCy! Here are two charts showing the model’s performance across twenty training iterations. The first chart shows how the loss changes over the course of training:

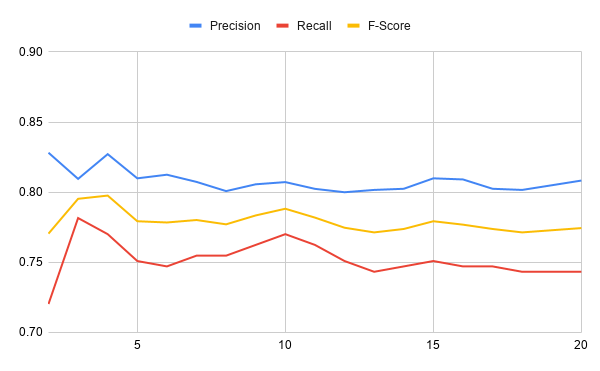

While the above graph shows loss over time, the next chart plots the precision, recall, and F-score over the same training period:

In these charts, you can see that the loss starts high but drops very quickly over training iterations. The precision, recall, and F-score are pretty stable after the first few training iterations. What could you tinker with to improve these values?

Congratulations on building your first sentiment analysis model in Python! What did you think of this project? Not only did you build a useful tool for data analysis, but you also picked up on a lot of the fundamental concepts of natural language processing and machine learning.

In this tutorial, you learned how to:

- Use natural language processing techniques

- Use a machine learning classifier to determine the sentiment of processed text data

- Build your own NLP pipeline with spaCy

You now have the basic toolkit to build more models to answer any research questions you might have. If you’d like to review what you’ve learned, you can download the code used in this tutorial and experiment with it.

What else could you do with this project? See below for some suggestions.

This is a core project that, depending on your interests, you can build a lot of functionality around. Here are a few ideas to get you started on extending this project:

The data-loading process loads every review into memory during load_data(). Can you make it more memory efficient by using generator functions instead?
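One possible answer, sketched under assumptions: if the reviews sit in the Large Movie Review Dataset's on-disk layout (one text file per review under pos/ and neg/ subdirectories of each split), a generator can yield one (text, label) pair at a time instead of building a full list. The function name iter_reviews() and the aclImdb/train path are hypothetical.

```python
from itertools import islice
from pathlib import Path
from typing import Iterator, Union


def iter_reviews(split_dir: Union[str, Path]) -> Iterator[tuple[str, str]]:
    """Lazily yield (text, label) pairs from split_dir/pos and split_dir/neg."""
    for label in ("pos", "neg"):
        for path in sorted(Path(split_dir, label).glob("*.txt")):
            # The raw reviews contain HTML line breaks; turn them into newlines
            text = path.read_text(encoding="utf-8").replace("<br />", "\n\n").strip()
            if text:  # skip empty files
                yield text, label


if __name__ == "__main__":
    # islice stops the generator early, so only the first 2,500 files are read
    first_batch = islice(iter_reviews("aclImdb/train"), 2500)
    for text, label in first_batch:
        ...  # preprocess or train on one review at a time
```

Because this generator yields every positive review before any negative one, shuffling still needs some buffering; shuffling the list of file paths first is a cheap way to get random order without holding all the texts in memory.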

Rewrite your code to remove stop words during preprocessing or data loading. How does the model performance change? Can you incorporate this preprocessing into a pipeline component instead?
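A minimal, library-free sketch of stop-word removal follows. The STOP_WORDS set is a tiny hypothetical list chosen for illustration; spaCy ships a much larger list and flags tokens with token.is_stop. One design choice worth testing: negations such as "not" are deliberately left out of the set, because dropping them can flip a review's meaning.

```python
import re

# Hypothetical, deliberately tiny stop-word list; "not" and "no" are kept out on purpose
STOP_WORDS = frozenset(
    {"a", "an", "the", "and", "or", "is", "was", "it", "this", "of", "to", "in"}
)


def remove_stop_words(text: str, stop_words: frozenset[str] = STOP_WORDS) -> list[str]:
    """Lowercase the text, split it into word tokens, and drop stop words."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return [token for token in tokens if token not in stop_words]


if __name__ == "__main__":
    print(remove_stop_words("This movie was not a waste of time"))
    # ['movie', 'not', 'waste', 'time']
```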

Use a tool like Click to generate an interactive command-line interface.

Deploy your model to a cloud platform like AWS and wire an API to it. This can form the basis of a web-based tool.

Explore the configuration parameters for the textcat pipeline component and experiment with different configurations.

Explore different ways to pass in new reviews to generate predictions.

Parametrize options such as where to save and load trained models, whether to skip training or train a new model, and so on.
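One way to approach this, sketched with the standard library's argparse rather than Click: the flag names (--model-dir, --skip-training, --limit) and the default directory are hypothetical choices, not options the tutorial defines.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Describe the command-line options for training and testing."""
    parser = argparse.ArgumentParser(description="Train or test a sentiment classifier.")
    parser.add_argument(
        "--model-dir",
        default="model_artifacts",
        help="directory to save the trained model to and load it from",
    )
    parser.add_argument(
        "--skip-training",
        action="store_true",
        help="reuse the saved model instead of training a new one",
    )
    parser.add_argument(
        "--limit", type=int, default=2500, help="maximum number of reviews to load"
    )
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args()
    if not args.skip_training:
        print(f"Training on up to {args.limit} reviews, saving to {args.model_dir}")
    print(f"Testing model loaded from {args.model_dir}")
```

argparse converts --model-dir to the attribute args.model_dir, so the options can be passed straight into the training and testing functions.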

This project uses the Large Movie Review Dataset, which is maintained by Andrew Maas. Thanks to Andrew for making this curated dataset widely available for use.

About Kyle Stratis

Kyle is a self-taught developer working as a senior data engineer at Vizit Labs. In the past, he has founded DanqEx (formerly Nasdanq: the original meme stock exchange) and Encryptid Gaming.

Sentiment analysis of movie reviews: finding most important movie aspects using driving factors

- Published: 18 July 2015

- Volume 20, pages 3373–3379 (2016)

- Viraj Parkhe (ORCID: orcid.org/0000-0003-3567-9570)

- Bhaskar Biswas

1860 Accesses

32 Citations

The opinion conveyed by the user towards the movie can be understood by sentiment analysis of the movie review. In the current work, we focus on finding the aspects of a movie review that direct its polarity the most. This is achieved using certain driving factors, which are scores given to the various movie aspects. Generally, it is found that aspects with high driving factors affect the review polarity the most.

Basari ASH, Hussin B, Ananta IGP, Zeniarja J (2012) Opinion mining of movie review using hybrid method of support vector machine and particle swarm optimization. In: Malaysian Technical Universities conference on engineering & technology (MUCET 2012) part 4: information and communication technology

Broß J, Ehrig H (2010) Generating a context-aware sentiment lexicon for aspect-based product review mining. In: IEEE/WIC/ACM international conference on web intelligence and intelligent agent technology

Kang H, Yoo SJ, Han D (2012) Senti-lexicon and improved Naive Bayes algorithms for sentiment analysis of restaurant reviews. Expert Syst Appl 39(5):6000–6010

Large Movie Review Dataset (2015) Acquired from Stanford AI Lab. http://ai.stanford.edu/~amaas/data/sentiment

Maas AL, Daly RE, Pham PT, Huang D, Ng AY, Potts C (2011) Learning word vectors for sentiment analysis. In: Proceedings of the 49th annual meeting of the association for computational linguistics: human language technologies

Pang B, Lee L, Vaithyanathan S (2002) Thumbs up? Sentiment classification using machine learning techniques. In: Proceedings of the conference on empirical methods in natural language processing (EMNLP), Philadelphia, pp 79–86

Parkhe V, Biswas B (2014) Aspect based sentiment analysis of movie reviews: finding the polarity directing aspects. In: Proceedings of international conference on soft computing and machine intelligence 2014

Sentiment Analysis (2015) Wikipedia, the free encyclopedia. http://wikipedia.org/wiki/Sentiment_analysis

SentiWordNet (2015) Lexical resource for opinion mining. http://sentiwordnet.isti.cnr.it

Singh VK, Piryani R, Uddin A, Waila P (2013) Sentiment analysis of movie reviews: a new feature-based heuristic for aspect-level sentiment classification. In: Proceedings of the 2013 international multi-conference on automation, communication, computing, control and compressed sensing, Kerala, India, IEEE Xplore, pp 712–717

Stanford Part-Of-Speech Tagger (2015) Stanford natural language processing group. http://nlp.stanford.edu/software/tagger.shtml

Taboada M, Brook J, Stede M (2009) Genre-based paragraph classification for sentiment analysis. In: Proceedings of SIGDIAL 2009: the 10th annual meeting of the special interest group in discourse and dialogue, University of London, Queen Mary, pp 62–70

Thet TT, Na J-C, Khoo CSG (2010) Aspect-based sentiment analysis of movie reviews on discussion boards. J Inf Sci 36(6):823–848

Yu J, Zha Z-J, Wang M, Chua T-S (2011) Aspect ranking: identifying important product aspects from online consumer reviews. In: Proceedings of the 49th annual meeting of the association for computational linguistics, Portland, Oregon, pp 1496–1505, 19–24 June 2011

Acknowledgments

The above work is an extension of previous work published in ISCMI 2014 (Parkhe and Biswas 2014 ). Proper citations have been included for the same in the above work for transparency purposes.

Author information

Authors and affiliations.

Department of Computer Science and Engineering, IIT-(BHU), Varanasi, India

Viraj Parkhe & Bhaskar Biswas

Corresponding author

Correspondence to Viraj Parkhe.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Informed consent

Informed consent was obtained from all individual participants included in the study. This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Communicated by S. Deb, T. Hanne and S. Fong.

About this article

Parkhe, V., Biswas, B. Sentiment analysis of movie reviews: finding most important movie aspects using driving factors. Soft Comput 20, 3373–3379 (2016). https://doi.org/10.1007/s00500-015-1779-1

Published : 18 July 2015

Issue Date : September 2016

DOI : https://doi.org/10.1007/s00500-015-1779-1

- Sentiment analysis

- Aspect-based sentiment analysis

- Naive Bayes classifier

- Aspect importance

Sentiment Analysis of Film Review Texts Based on Sentiment Dictionary and SVM

Bibliometrics & citations.

- Campregher Paiva I Diecke J (2024) Revisiting Weimar Film Reviewers’ Sentiments: Integrating Lexicon-Based Sentiment Analysis with Large Language Models Journal of Cultural Analytics 10.22148/001c.118497 9 :4 Online publication date: 18-Jul-2024 https://doi.org/10.22148/001c.118497

- Yang J (2024) Dictionary-based Sentiment Analysis at Subword Level 2024 IEEE International Conference on Industry 4.0, Artificial Intelligence, and Communications Technology (IAICT) 10.1109/IAICT62357.2024.10617606 (8-13) Online publication date: 4-Jul-2024 https://doi.org/10.1109/IAICT62357.2024.10617606

- Fang Q (2024) Research on the Cultivation of Practical English Talents Based on a Big Data-Driven Model and Sentiment Dictionary Analysis IEEE Access 10.1109/ACCESS.2024.3410281 12 (80922-80929) Online publication date: 2024 https://doi.org/10.1109/ACCESS.2024.3410281

Index Terms

Computing methodologies

Artificial intelligence

Natural language processing

Information extraction

Information

Published in.

In-Cooperation

- Xi'an Jiaotong-Liverpool University

- University of Texas-Dallas

Association for Computing Machinery

New York, NY, United States

Author Tags

- Film Review Texts

- Sentiment Analysis

- Sentiment Dictionary

- Research-article

- Refereed limited

Article Metrics

- 19 Total Citations

- 505 Total Downloads

- Downloads (Last 12 months) 71

- Downloads (Last 6 weeks) 4

- Mallol-Ragolta A Schuller B (2024) Coupling Sentiment and Arousal Analysis Towards an Affective Dialogue Manager IEEE Access 10.1109/ACCESS.2024.3361750 12 (20654-20662) Online publication date: 2024 https://doi.org/10.1109/ACCESS.2024.3361750

- Yu Y Chen J Mehraliyev F Hu S Wang S Liu J (2024) Exploring the diversity of emotion in hospitality and tourism from big data: a novel sentiment dictionary International Journal of Contemporary Hospitality Management 10.1108/IJCHM-08-2023-1234 Online publication date: 2-Jul-2024 https://doi.org/10.1108/IJCHM-08-2023-1234

- Kim C Paano C Chickanayakanahalli A Xiao Y Diamond S (2024) ContentRank: Towards a Scoring and Ranking System for Screen Media Products Using Critical Reception Data Culture and Computing 10.1007/978-3-031-61147-6_5 (55-73) Online publication date: 1-Jun-2024 https://doi.org/10.1007/978-3-031-61147-6_5

- Yang Q Zhang J (2023) Sentiment Distribution of Topic Discussion in Online English Learning International Journal of Information Technologies and Systems Approach 10.4018/IJITSA.325791 16 :2 (1-14) Online publication date: 11-Jul-2023 https://dl.acm.org/doi/10.4018/IJITSA.325791

- Li F Huo Y Wang L (2023) Research on Chinese Emotion Classification using BERT-RCNN-ATT WSEAS TRANSACTIONS ON COMMUNICATIONS 10.37394/23204.2023.22.2 22 (17-31) Online publication date: 17-Mar-2023 https://doi.org/10.37394/23204.2023.22.2

- Huang X Zhou Y Du Y (2023) A Novel Bi-Dual Inference Approach for Detecting Six-Element Emotions Applied Sciences 10.3390/app13179957 13 :17 (9957) Online publication date: 3-Sep-2023 https://doi.org/10.3390/app13179957

- Liu J (2023) Sentiment analysis of domestic movie reviews based on a discrete regression model Applied Mathematics and Nonlinear Sciences 10.2478/amns.2023.2.00036 9 :1 Online publication date: 12-Jul-2023 https://doi.org/10.2478/amns.2023.2.00036

Movies have been important in our lives for many years. Movies provide entertainment, inspire, educate, and offer an escape from reality. Movie reviews help us choose better movies, but reading them all can be time-consuming and overwhelming. To make it easier, sentiment analysis can classify movie reviews into positive and negative categories. Opinion mining (OM), also called sentiment analysis ...

Maybe you're interested in knowing whether movie reviews are positive or negative. Companies use sentiment analysis in a variety of settings, particularly for marketing purposes. Uses include social media monitoring, brand monitoring, customer feedback, customer service, and market research ("Sentiment Analysis"). This post will cover:

Sentiment analysis can successfully rate the movie and infer the emotion of the review fairly accurately. After careful analysis of the results obtained from both classifiers, I have concluded that when the training set is drawn from the reviews themselves, the accuracy of the Naïve Bayes classifier (0.97) is higher than that of the decision tree classifier (0.65).

Explore and run machine learning code with Kaggle Notebooks | Using data from IMDB Dataset of 50K Movie Reviews.

[17] S. M. Qaisar, "Sentiment Analysis of IMDb Movie Reviews Using Long Short-Term Memory," 2020 2nd International Conference on Computer and Information Sciences (ICCIS), Sakaka, Saudi

This paper presents a sentiment analysis of movie reviews, illustrating the datasets, approach, results, and limitations of the work done by the researchers.

2 Related Work

With the growing trend in this domain, we studied the work done by the researchers from 2017 to 2021. The relevant papers were extracted from various online ...

To improve the accuracy of sentiment analysis in movie reviews, it was integrated with the Albert-BiLSTM-Attention model. The results of six rounds of comparative experiments show that the method suggested in this paper has improved average precision, average recall, and average F1 score in the sentiment classification of this dataset. ...

The labeled data set consists of 50,000 IMDB movie reviews, specially selected for sentiment analysis. The sentiment of reviews is binary, meaning an IMDB rating < 5 results in a sentiment score of 0, and a rating >= 7 results in a sentiment score of 1. No individual movie has more than 30 reviews. The 25,000 review labeled training set does not include ...
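The labeling rule in that description can be written as a small function. This is a sketch of the stated thresholds only; returning None for ratings 5 and 6 reflects that those neutral ratings fall into neither class, and the function name is hypothetical.

```python
from typing import Optional


def rating_to_sentiment(rating: int) -> Optional[int]:
    """Map a 1-10 IMDB rating to a binary sentiment label, or None if neutral."""
    if not 1 <= rating <= 10:
        raise ValueError(f"rating must be between 1 and 10, got {rating}")
    if rating < 5:
        return 0  # negative
    if rating >= 7:
        return 1  # positive
    return None  # ratings 5 and 6 fall into neither class
```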

The report follows the methodology shown in Fig. 1 to analyze the sentiment of IMDb reviews. First, the data is fed into the data cleaning and preprocessing stage. Next, stop words and some irrelevant words are removed from the original data; then, vectorization techniques are applied ...

Sentiment analysis is a natural language processing problem where text is understood, and the underlying intent is predicted. In this post, you will discover how you can predict the sentiment of movie reviews as either positive or negative in Python using the Keras deep learning library. After reading this post, you will know: About the IMDB sentiment analysis problem for…

This process will generate a trained model that you can then use to predict the sentiment of a given piece of text. To take advantage of this tool, you'll need to do the following steps:

- Add the textcat component to the existing pipeline.

- Add valid labels to the textcat component.

- Load, shuffle, and split your data.
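The last of those steps, loading, shuffling, and splitting, does not depend on spaCy and can be sketched in plain Python. The function name, the 80/20 default, and the optional seed are assumptions for illustration.

```python
import random
from typing import Optional, Sequence, TypeVar

T = TypeVar("T")


def shuffle_and_split(
    items: Sequence[T], split: float = 0.8, seed: Optional[int] = None
) -> tuple[list[T], list[T]]:
    """Shuffle a copy of items and split it into (train, test) lists."""
    if not 0.0 < split < 1.0:
        raise ValueError("split must be between 0 and 1")
    shuffled = list(items)  # copy so the caller's data is not reordered
    random.Random(seed).shuffle(shuffled)  # a seeded RNG makes the split repeatable
    cut = int(len(shuffled) * split)
    return shuffled[:cut], shuffled[cut:]
```

Shuffling before splitting matters when the source is ordered by label (for example, all positive reviews first); otherwise the test set could end up containing only one class.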

Sentiment analysis is one of the main challenges in natural language processing. Recently, deep learning applications have shown impressive results across different NLP tasks. In this work, I explore the performance of different deep learning architectures for semantic analysis of movie reviews, using the Stanford Sentiment Treebank as the main dataset.

BERT has been used primarily in [9] for sentiment analysis, but the accuracy is not satisfactory. In this paper, we fine-tune BERT for sentiment analysis on movie reviews, comparing both binary and fine-grained classifications, and achieve, with our best method, accuracy that surpasses state-of-the-art (SOTA) models.

Sentiment Analysis. Large Movie Review Dataset. This is a dataset for binary sentiment classification containing substantially more data than previous benchmark datasets. We provide a set of 25,000 highly polar movie reviews for training, and 25,000 for testing. There is additional unlabeled data for use as well.

In this article, a method for automatic sentiment analysis of movie reviews is proposed, implemented and evaluated. In contrast to most studies that focus on determining only sentiment orientation (positive versus negative), the proposed method performs fine-grained analysis to determine both the sentiment orientation and sentiment strength of the reviewer towards various aspects of a movie.

On Dec 15, 2017, Palak Baid and others published Sentiment Analysis of Movie Reviews using Machine Learning Techniques.

Movie reviews have been used before for sentiment analysis. We expect that comments express the same range of opinions and subjectivity as movie reviews. The main difference between movie reviews and Digg comments is the length of the text. A typical comment is only one or two sentences long and is usually narrowly focused on a ...

Sentiment analysis of film review texts extracts and analyzes the sentiment information hidden in the text data, thereby helping online platforms such as media sites analyze the audience's preferences for films. Based on this, this paper proposes a film review text sentiment analysis method based on SVM ...

Sentiment analysis is widely used in NLP to extract and observe the opinions of an individual or a group of people, based on their own words or their views on certain incidents, as expressed in a social media post, a movie review, or even feedback on a product purchased online. This technique can directly be used to predict the emotions conveyed by the text ...

Classify the sentiment of sentences from the Rotten Tomatoes dataset.