Understanding Hypothesis Testing

Hypothesis testing is a fundamental statistical method employed in various fields, including data science, machine learning, and statistics, to make informed decisions based on empirical evidence. It involves formulating assumptions about population parameters using sample statistics and rigorously evaluating these assumptions against collected data. At its core, hypothesis testing is a systematic approach that allows researchers to assess the validity of a statistical claim about an unknown population parameter. This article sheds light on the significance of hypothesis testing and the critical steps involved in the process.

Table of Contents

- What is Hypothesis Testing?

- Why do we use Hypothesis Testing?

- One-Tailed and Two-Tailed Test

- What are Type I and Type II Errors in Hypothesis Testing?

- How does Hypothesis Testing work?

- Real-Life Examples of Hypothesis Testing

- Limitations of Hypothesis Testing

What is Hypothesis Testing?

A hypothesis is an assumption or idea, specifically a statistical claim about an unknown population parameter. For example, a judge assumes a person is innocent and verifies this by reviewing evidence and hearing testimony before reaching a verdict.

Hypothesis testing is a statistical method for making decisions from experimental data. It starts from an assumption about a population parameter and evaluates two mutually exclusive statements about the population to determine which one is better supported by the sample data.

To test the validity of the claim or assumption about the population parameter:

- A sample is drawn from the population and analyzed.

- The results of the analysis are used to decide whether the sample data are consistent with the claim.

Example: Suppose you claim that the average height in a class is 30, or that boys are taller than girls. Both statements are assumptions, and we need a statistical procedure to check whether the data support them.

This structured approach is central to making data-informed decisions in data science, machine learning, and statistics.

- By applying hypothesis testing in data analytics and other fields, practitioners can rigorously evaluate their assumptions and derive meaningful insights from their analyses.

- Understanding how hypotheses are generated and tested is also essential for applying statistical hypothesis testing effectively.

Defining Hypotheses

- Null hypothesis ([Tex]H_0[/Tex]): In statistics, the null hypothesis is a general statement or default position that there is no relationship between two measured quantities or no difference among groups. In other words, it is the baseline assumption, made on the basis of knowledge of the problem. Example: A company’s mean production is 50 units per day, i.e. [Tex]H_0: \mu = 50[/Tex].

- Alternative hypothesis ([Tex]H_1[/Tex]): The alternative hypothesis is the hypothesis that contradicts the null hypothesis. Example: A company’s mean production is not equal to 50 units per day, i.e. [Tex]H_1: \mu \ne 50[/Tex].

Key Terms of Hypothesis Testing

- Level of significance: The probability of rejecting the null hypothesis when it is actually true. Because certainty is not attainable from a sample, this risk is fixed in advance. It is denoted by [Tex]\alpha[/Tex] and is commonly set to 0.05 (5%), meaning we accept a 5% chance of a false rejection.

- P-value: The probability, computed under the assumption that the null hypothesis ([Tex]H_0[/Tex]) is true, of obtaining results at least as extreme as those observed. If the p-value is less than or equal to the chosen significance level, you reject the null hypothesis, i.e. the sample provides evidence in favor of the alternative hypothesis.

- Test statistic: The test statistic is a numerical value calculated from sample data during a hypothesis test, used to determine whether to reject the null hypothesis. It is compared with a critical value, or converted to a p-value, to judge the statistical significance of the observed results.

- Critical value: The critical value in statistics is a threshold or cutoff point used to determine whether to reject the null hypothesis in a hypothesis test.

- Degrees of freedom: The number of independent pieces of information available for estimating a parameter. Degrees of freedom depend on the sample size and determine the shape of the reference distribution (for example, the t- or chi-square distribution).

Why do we use Hypothesis Testing?

Hypothesis testing is an important procedure in statistics. It evaluates two mutually exclusive statements about a population to determine which one is better supported by the sample data. When we say that a finding is statistically significant, it is hypothesis testing that justifies the claim.

It also gives data scientists and machine learning practitioners a structured framework for generating and testing statistical hypotheses, and it can be carried out in Python for efficient analysis. When many hypotheses are tested at once, as often happens in machine learning, multiple-testing techniques help keep results reliable and avoid conclusions drawn from chance findings.

One-Tailed and Two-Tailed Test

One-Tailed Test

A one-tailed test focuses on one direction, either greater than or less than a specified value. We use a one-tailed test when there is a clear directional expectation based on prior knowledge or theory. The critical region lies on only one side of the distribution curve. If the test statistic falls into this critical region, the null hypothesis is rejected in favor of the alternative hypothesis.

There are two types of one-tailed test:

- Left-Tailed (Left-Sided) Test: The alternative hypothesis asserts that the true parameter value is less than the value stated in the null hypothesis. Example: [Tex]H_0: \mu \geq 50[/Tex] and [Tex]H_1: \mu < 50[/Tex]

- Right-Tailed (Right-Sided) Test: The alternative hypothesis asserts that the true parameter value is greater than the value stated in the null hypothesis. Example: [Tex]H_0: \mu \leq 50[/Tex] and [Tex]H_1: \mu > 50[/Tex]

Two-Tailed Test

A two-tailed test considers both directions, greater than and less than a specified value. We use a two-tailed test when there is no specific directional expectation and we want to detect any significant difference.

Example: [Tex]H_0: \mu = 50[/Tex] and [Tex]H_1: \mu \neq 50[/Tex]
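To make the difference between the tails concrete, the following minimal sketch computes left-tailed, right-tailed, and two-tailed p-values for the same z statistic. The helper name `z_p_values` and the value z = 1.8 are illustrative assumptions, not part of the original article.

```python
from scipy import stats

def z_p_values(z):
    """Return (left-tailed, right-tailed, two-tailed) p-values for a z statistic."""
    p_left = stats.norm.cdf(z)          # P(Z <= z): evidence for "less than"
    p_right = stats.norm.sf(z)          # P(Z >= z): evidence for "greater than"
    p_two = 2 * stats.norm.sf(abs(z))   # both tails: evidence for "different from"
    return p_left, p_right, p_two

# Hypothetical test statistic, chosen only for illustration
p_left, p_right, p_two = z_p_values(1.8)
print(f"left-tailed:  {p_left:.4f}")
print(f"right-tailed: {p_right:.4f}")
print(f"two-tailed:   {p_two:.4f}")
```

With this z value, the right-tailed test would reject at the 0.05 level while the two-tailed test would not, which is why the direction of the alternative hypothesis should be fixed before looking at the data.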


What are Type I and Type II Errors in Hypothesis Testing?

In hypothesis testing, Type I and Type II errors are two possible errors that researchers can make when drawing conclusions about a population based on a sample of data. These errors are associated with the decisions made regarding the null hypothesis and the alternative hypothesis.

- Type I error: We reject the null hypothesis although it is true. The probability of a Type I error is denoted by alpha ([Tex]\alpha[/Tex]).

- Type II error: We fail to reject the null hypothesis although it is false. The probability of a Type II error is denoted by beta ([Tex]\beta[/Tex]).
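Both error rates can be estimated by simulation. The sketch below is only an illustration under assumed settings: normally distributed data, a null mean of 50, a standard deviation of 5, samples of size 30, and a hypothetical true mean of 53 for the "null is false" scenario. The function name `rejection_rate` is likewise an assumption.

```python
import numpy as np
from scipy import stats

def rejection_rate(true_mean, null_mean=50.0, sigma=5.0, n=30,
                   alpha=0.05, n_trials=5000, seed=0):
    """Fraction of simulated samples in which a two-sided one-sample t-test rejects H0."""
    rng = np.random.default_rng(seed)
    samples = rng.normal(true_mean, sigma, size=(n_trials, n))
    # One t-test per row (axis=1), all against the same null mean
    result = stats.ttest_1samp(samples, popmean=null_mean, axis=1)
    return float(np.mean(result.pvalue < alpha))

type_1_rate = rejection_rate(true_mean=50.0)  # H0 true: every rejection is a Type I error
power = rejection_rate(true_mean=53.0)        # H0 false: every rejection is a correct decision
type_2_rate = 1.0 - power                     # H0 false: every non-rejection is a Type II error

print(f"Estimated Type I error rate:  {type_1_rate:.3f}")
print(f"Estimated Type II error rate: {type_2_rate:.3f}")
```

The estimated Type I error rate should land close to the chosen alpha, while the Type II error rate depends on the sample size and on how far the true mean is from the null value.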

How does Hypothesis Testing work?

Step 1: Define Null and Alternative Hypothesis

State the null hypothesis ([Tex]H_0[/Tex]), representing no effect, and the alternative hypothesis ([Tex]H_1[/Tex]), suggesting an effect or difference.

We first identify the problem we want to test, keeping in mind that the two hypotheses must contradict one another. The tests described here assume normally distributed data.

Step 2: Choose Significance Level

Select a significance level ([Tex]\alpha[/Tex]), typically 0.05, which sets the threshold for rejecting the null hypothesis. The significance level is fixed before the test is run, so the decision rule cannot be tuned to the data. The p-value computed from the sample is later compared against it.

Step 3: Collect and Analyze Data

Gather relevant data through observation or experimentation. Analyze the data using appropriate statistical methods to obtain a test statistic.

Step 4: Calculate Test Statistic

In this step the data are evaluated and a score is computed based on their characteristics. The choice of test statistic depends on the type of hypothesis test being conducted.

There are various hypothesis tests, each suited to a different goal, such as the Z-test, Chi-square test, T-test, and so on.

- Z-test: Used when the population standard deviation is known (or the sample is large); the Z-statistic is the usual choice.

- t-test: Used when the population standard deviation is unknown and the sample size is small; the t-statistic is then more appropriate.

- Chi-square test: Used for categorical data or for testing independence in contingency tables.

- F-test: Often used in analysis of variance (ANOVA) to compare variances or test the equality of means across multiple groups.
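Of the tests listed, the F-test is the only one not given a formula later in this article, so a short sketch may help. It computes the one-way ANOVA F-statistic by hand and compares it with `scipy.stats.f_oneway`. The three groups of measurements are hypothetical values chosen only for illustration, and the helper name `one_way_f` is an assumption.

```python
import numpy as np
from scipy import stats

# Hypothetical measurements for three groups (illustrative values only)
groups = [
    np.array([20.0, 22.0, 19.0, 24.0, 21.0]),
    np.array([28.0, 30.0, 27.0, 26.0, 29.0]),
    np.array([23.0, 25.0, 22.0, 24.0, 26.0]),
]

def one_way_f(groups):
    """F = (between-group mean square) / (within-group mean square)."""
    all_values = np.concatenate(groups)
    grand_mean = all_values.mean()
    k, n_total = len(groups), all_values.size
    ss_between = sum(g.size * (g.mean() - grand_mean) ** 2 for g in groups)
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))

f_manual = one_way_f(groups)
f_scipy, p_value = stats.f_oneway(*groups)

print(f"F (manual): {f_manual:.3f}")
print(f"F (scipy):  {f_scipy:.3f}, p-value: {p_value:.5f}")
```

A large F-statistic means the group means differ by more than the within-group spread would explain, which is the sense in which the F-test compares means across multiple groups.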

When the dataset is small and the population standard deviation is unknown, a T-test is the more appropriate choice for testing the hypothesis.

T-statistic is a measure of the difference between the means of two groups relative to the variability within each group. It is calculated as the difference between the sample means divided by the standard error of the difference. It is also known as the t-value or t-score.

Step 5: Compare Test Statistic

In this stage, we decide whether to reject or fail to reject the null hypothesis. There are two ways to make this decision.

Method A: Using Critical Values

Comparing the test statistic with the tabulated critical value (using the absolute value of the test statistic for a two-tailed test), we have:

- If Test Statistic > Critical Value: Reject the null hypothesis.

- If Test Statistic ≤ Critical Value: Fail to reject the null hypothesis.

Note: Critical values are predetermined threshold values that are used to make a decision in hypothesis testing. To determine them, we typically refer to a statistical distribution table, such as the normal distribution or t-distribution table, for the chosen significance level (and, for the t-distribution, the degrees of freedom).

Method B: Using P-values

We can also come to a conclusion using the p-value:

- If the p-value is less than or equal to the significance level, i.e. ([Tex]p \leq \alpha[/Tex]), you reject the null hypothesis. This indicates that the observed results are unlikely to have occurred by chance alone, providing evidence in favor of the alternative hypothesis.

- If the p-value is greater than the significance level, i.e. ([Tex]p > \alpha[/Tex]), you fail to reject the null hypothesis. This suggests that the observed results are consistent with what would be expected under the null hypothesis.

Note: The p-value is the probability of obtaining a test statistic as extreme as, or more extreme than, the one observed in the sample, assuming the null hypothesis is true. To determine it, we typically use statistical software or a statistical distribution table, such as the normal distribution or t-distribution table.
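Instead of a printed table, critical values can be read from a distribution's inverse CDF. The sketch below shows both decision methods for a two-tailed test at alpha = 0.05. The function names and the example of 9 degrees of freedom are illustrative assumptions.

```python
from scipy import stats

alpha = 0.05

# Two-tailed critical values: alpha is split evenly across both tails
z_crit = stats.norm.ppf(1 - alpha / 2)   # standard normal distribution
t_crit = stats.t.ppf(1 - alpha / 2, 9)   # t-distribution with 9 degrees of freedom

def decide_by_critical_value(test_stat, critical_value):
    """Method A (two-tailed): reject H0 when |test statistic| exceeds the critical value."""
    return "reject H0" if abs(test_stat) > critical_value else "fail to reject H0"

def decide_by_p_value(p_value, alpha=0.05):
    """Method B: reject H0 when the p-value is at most alpha."""
    return "reject H0" if p_value <= alpha else "fail to reject H0"

print(f"z critical value: {z_crit:.3f}")
print(f"t critical value (df=9): {t_crit:.3f}")
print(decide_by_critical_value(2.04, z_crit))
print(decide_by_p_value(0.20))
```

For the same test and the same alpha, the two methods always lead to the same decision, because the p-value falls below alpha exactly when the test statistic falls beyond the critical value.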

Step 6: Interpret the Results

Finally, we state the conclusion of the experiment using Method A or Method B.

Calculating Test Statistic

To validate a hypothesis about a population parameter we use statistical functions. For normally distributed data, the z-score, p-value, and level of significance (alpha) together provide the evidence for or against the hypothesis.

1. Z-statistics:

Used when the population mean and standard deviation are known.

[Tex]z = \frac{\bar{x} - \mu}{\frac{\sigma}{\sqrt{n}}}[/Tex]

- [Tex]\bar{x}[/Tex] is the sample mean,

- μ represents the population mean,

- σ is the population standard deviation,

- and n is the size of the sample.

2. T-Statistics

The T-test is used when the population standard deviation is unknown, typically with small samples (n < 30).

The t-statistic is given by:

[Tex]t = \frac{\bar{x} - \mu}{s/\sqrt{n}}[/Tex]

- t = t-score,

- x̄ = sample mean

- μ = population mean,

- s = standard deviation of the sample,

- n = sample size
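The t-statistic formula can be checked against SciPy's one-sample t-test. The sample below is hypothetical (eight made-up daily production counts tested against a null mean of 50) and serves only to illustrate the arithmetic.

```python
import numpy as np
from scipy import stats

# Hypothetical sample of n = 8 daily production counts (illustrative values only)
sample = np.array([52.0, 48.0, 51.0, 53.0, 49.0, 54.0, 50.0, 55.0])
mu_0 = 50.0  # population mean stated in the null hypothesis

n = sample.size
x_bar = sample.mean()
s = sample.std(ddof=1)  # sample standard deviation (n - 1 in the denominator)
t_manual = (x_bar - mu_0) / (s / np.sqrt(n))

# SciPy's one-sample t-test should reproduce the same statistic
result = stats.ttest_1samp(sample, popmean=mu_0)

print(f"t (manual): {t_manual:.4f}")
print(f"t (scipy):  {result.statistic:.4f}, p-value: {result.pvalue:.4f}")
```

Note the `ddof=1` argument: NumPy's default `std` divides by n, whereas the t-statistic uses the sample standard deviation, which divides by n - 1.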

3. Chi-Square Test

The Chi-Square test for independence is used for categorical (non-normally distributed) data:

[Tex]\chi^2 = \sum \frac{(O_{ij} - E_{ij})^2}{E_{ij}}[/Tex]

- [Tex]O_{ij}[/Tex] is the observed frequency in cell [Tex]{ij}[/Tex],

- i and j are the row and column indices respectively,

- [Tex]E_{ij}[/Tex] is the expected frequency in cell [Tex]{ij}[/Tex], calculated as: [Tex]\frac{\text{Row total} \times \text{Column total}}{\text{Total observations}}[/Tex]
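As a sketch of the chi-square computation, the code below builds the expected frequencies from the row and column totals of a hypothetical 2x2 contingency table and compares the hand-computed statistic with `scipy.stats.chi2_contingency`. The table values are made up for illustration.

```python
import numpy as np
from scipy import stats

# Hypothetical 2x2 contingency table (rows: group A/B, columns: outcome yes/no)
observed = np.array([[30, 20],
                     [20, 30]])

row_totals = observed.sum(axis=1, keepdims=True)
col_totals = observed.sum(axis=0, keepdims=True)
expected = row_totals * col_totals / observed.sum()  # E_ij = row total * column total / N

chi2_manual = ((observed - expected) ** 2 / expected).sum()

# correction=False disables Yates' continuity correction so the result matches the plain formula
chi2_scipy, p_value, dof, expected_scipy = stats.chi2_contingency(observed, correction=False)

print(f"chi-square (manual): {chi2_manual:.3f}")
print(f"chi-square (scipy):  {chi2_scipy:.3f}, dof: {dof}, p-value: {p_value:.4f}")
```

By default SciPy applies a continuity correction to 2x2 tables, so the uncorrected call is needed here for the two numbers to agree.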

Real-Life Examples of Hypothesis Testing

Let’s examine hypothesis testing using two real-life situations.

Case A: Does a New Drug Affect Blood Pressure?

Imagine a pharmaceutical company has developed a new drug that they believe can effectively lower blood pressure in patients with hypertension. Before bringing the drug to market, they need to conduct a study to assess its impact on blood pressure.

- Before Treatment: 120, 122, 118, 130, 125, 128, 115, 121, 123, 119

- After Treatment: 115, 120, 112, 128, 122, 125, 110, 117, 119, 114

Step 1: Define the Hypothesis

- Null Hypothesis ([Tex]H_0[/Tex]): The new drug has no effect on blood pressure.

- Alternate Hypothesis ([Tex]H_1[/Tex]): The new drug has an effect on blood pressure.

Step 2: Define the Significance Level

Let’s set the significance level at 0.05: the null hypothesis is rejected if the evidence suggests less than a 5% chance of observing results this extreme due to random variation alone.

Step 3: Compute the Test Statistic

Using a paired T-test, analyze the data to obtain a test statistic and a p-value.

The test statistic (here, the T-statistic) is calculated from the differences between blood pressure measurements before and after treatment.

[Tex]t = \frac{m}{s/\sqrt{n}}[/Tex]

- m = mean of the differences [Tex]d_i = X_{\text{after},i} - X_{\text{before},i}[/Tex],

- s = standard deviation of the differences [Tex]d_i[/Tex],

- n = sample size.

Here m = -3.9, s ≈ 1.37 and n = 10, so the paired T-test formula gives

[Tex]t = \frac{-3.9}{1.37/\sqrt{10}} \approx -9[/Tex]

Step 4: Find the p-value

With a calculated t-statistic of -9 and degrees of freedom df = n - 1 = 9, the p-value can be found using statistical software or a t-distribution table.

Thus, p-value = 8.538051223166285e-06

Step 5: Result

- If the p-value is less than or equal to 0.05, the researchers reject the null hypothesis.

- If the p-value is greater than 0.05, they fail to reject the null hypothesis.

Conclusion: Since the p-value (8.538051223166285e-06) is less than the significance level (0.05), the researchers reject the null hypothesis. There is statistically significant evidence that the average blood pressure before and after treatment with the new drug is different.

Python Implementation of Case A

Let’s implement this hypothesis test in Python, testing whether the new drug affects blood pressure. For this example, we use a paired T-test from the scipy.stats library.

SciPy is a Python library for scientific computing, including statistics, optimization, and numerical routines.

We will implement our first real-life problem in Python:
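A minimal sketch of such an implementation, assuming `scipy.stats.ttest_rel` for the paired test and the before/after measurements listed above. The variable names are illustrative rather than taken from the original listing.

```python
import numpy as np
from scipy import stats

before = np.array([120, 122, 118, 130, 125, 128, 115, 121, 123, 119])
after = np.array([115, 120, 112, 128, 122, 125, 110, 117, 119, 114])

# Paired t-test from SciPy (two-sided by default)
t_stat, p_value = stats.ttest_rel(after, before)

# Same statistic from the formula t = m / (s / sqrt(n))
diff = after - before
m = diff.mean()           # mean of the paired differences
s = diff.std(ddof=1)      # sample standard deviation of the differences
n = diff.size
t_manual = m / (s / np.sqrt(n))

# Two-sided p-value recovered from the t-distribution with n - 1 degrees of freedom
p_manual = 2 * stats.t.sf(abs(t_manual), n - 1)

alpha = 0.05
decision = "Reject" if p_value <= alpha else "Fail to reject"

print("T-statistic (from scipy):", t_stat)
print("P-value (from scipy):", p_value)
print("T-statistic (calculated manually):", t_manual)
print(f"Decision: {decision} the null hypothesis at alpha={alpha}.")
```

Passing `after` first makes the differences after minus before, so a drop in blood pressure shows up as a negative t-statistic.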

Output:

T-statistic (from scipy): -9.0

P-value (from scipy): 8.538051223166285e-06

T-statistic (calculated manually): -9.0

Decision: Reject the null hypothesis at alpha=0.05.

Conclusion: There is statistically significant evidence that the average blood pressure before and after treatment with the new drug is different.

In the above example, the T-statistic of approximately -9 and the extremely small p-value give strong grounds to reject the null hypothesis at a significance level of 0.05.

- The results suggest that the new drug has a statistically significant effect on blood pressure, in the direction of lowering it.

- The negative T-statistic indicates that mean blood pressure after treatment is lower than mean blood pressure before treatment in the same patients.

Case B : Cholesterol level in a population

Data: A sample of 25 individuals is taken, and their cholesterol levels are measured.

Cholesterol Levels (mg/dL): 205, 198, 210, 190, 215, 205, 200, 192, 198, 205, 198, 202, 208, 200, 205, 198, 205, 210, 192, 205, 198, 205, 210, 192, 205.

Population Mean (μ): 200 mg/dL

Population Standard Deviation (σ): 5 mg/dL (given for this problem)

Step 1: Define the Hypothesis

- Null Hypothesis (H₀): The average cholesterol level in the population is 200 mg/dL.

- Alternate Hypothesis (H₁): The average cholesterol level in the population is different from 200 mg/dL.

Step 2: Define the Significance Level

As the direction of deviation is not given, we assume a two-tailed test. From a standard normal (z) table, the critical values for a significance level of 0.05 (two-tailed) are approximately -1.96 and 1.96.

Step 3: Compute the Test Statistic

The 25 measurements sum to 5051, so the sample mean is 5051 / 25 = 202.04 mg/dL. The test statistic is calculated with the z formula:

$Z = \frac{\bar{x} - \mu}{\sigma / \sqrt{n}} = \frac{202.04 - 200}{5 / \sqrt{25}} = 2.04$

(Floating-point arithmetic reports this as Z = 2.039999999999992.)

Step 4: Result

Since the absolute value of the test statistic (2.04) is greater than the critical value (1.96), we reject the null hypothesis and conclude that there is statistically significant evidence that the average cholesterol level in the population is different from 200 mg/dL.

Python Implementation of Case B

Reject the null hypothesis. There is statistically significant evidence that the average cholesterol level in the population is different from 200 mg/dL.
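The original listing for Case B is not reproduced here, so the following is a minimal sketch of a one-sample z-test on the data given above. It uses only the Python standard library (`statistics.NormalDist`) rather than SciPy, and all variable names are assumptions made for illustration.

```python
import math
from statistics import NormalDist, mean

# Cholesterol levels (mg/dL) from Case B
cholesterol = [
    205, 198, 210, 190, 215, 205, 200, 192, 198, 205,
    198, 202, 208, 200, 205, 198, 205, 210, 192, 205,
    198, 205, 210, 192, 205,
]
population_mean = 200  # mu under the null hypothesis
population_std = 5     # sigma, given in the problem
alpha = 0.05           # two-tailed significance level

sample_mean = mean(cholesterol)
standard_error = population_std / math.sqrt(len(cholesterol))
z_score = (sample_mean - population_mean) / standard_error

# Two-tailed critical value and p-value from the standard normal distribution
critical_value = NormalDist().inv_cdf(1 - alpha / 2)
p_value = 2 * (1 - NormalDist().cdf(abs(z_score)))

print(f"Sample mean: {sample_mean:.2f}, Z = {z_score:.2f}, p-value = {p_value:.4f}")
if abs(z_score) > critical_value:
    print("Reject the null hypothesis.")
else:
    print("Fail to reject the null hypothesis.")
```

Comparing |Z| with the critical value and comparing the p-value with alpha are equivalent decision rules; the sketch computes both so either can be reported.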

Although hypothesis testing is a useful technique in data science, it does not by itself offer a comprehensive understanding of the topic being studied. Its main limitations are:

- Lack of Comprehensive Insight: Hypothesis testing focuses on specific hypotheses, which may not fully capture the complexity of the phenomena being studied.

- Dependence on Data Quality: The accuracy of the results relies heavily on the quality of the available data. Inaccurate or biased data can lead to incorrect conclusions.

- Overlooking Patterns: Sole reliance on hypothesis testing can miss significant patterns or relationships in the data that the tested hypotheses do not cover.

- Contextual Limitations: A test result may not reflect the broader context, leading to oversimplified conclusions.

- Complementary Methods Needed: For a more complete understanding, hypothesis testing should be combined with other analytical approaches, such as exploratory data analysis and data mining.

- Misinterpretation Risks: Poorly formulated hypotheses or inappropriate statistical methods can lead to misinterpretation, so both the hypotheses and the choice of test need careful consideration.

- Multiple Hypothesis Testing Challenges: Running many tests on the same data increases the likelihood of at least one Type I error, so the significance threshold must be adjusted to keep the overall error rate under control.
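One common adjustment for the multiple-testing problem in the last point is the Bonferroni correction, which divides the significance level by the number of tests. The sketch below is illustrative only: the function name and the p-values are made up for the example and do not come from the article.

```python
def bonferroni_reject(p_values, alpha=0.05):
    """Return one reject decision per test, using alpha / (number of tests)."""
    adjusted_alpha = alpha / len(p_values)
    return [p <= adjusted_alpha for p in p_values]

# Hypothetical p-values from four separate tests (made-up numbers)
p_values = [0.001, 0.02, 0.04, 0.30]

uncorrected = [p <= 0.05 for p in p_values]
corrected = bonferroni_reject(p_values, alpha=0.05)

print("Uncorrected:", uncorrected)
print("Bonferroni: ", corrected)
```

With four tests the per-test threshold becomes 0.0125, so only the first test remains significant. Bonferroni is conservative; other corrections exist and may be more appropriate when many tests are run.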

Hypothesis testing is a cornerstone of statistical analysis, allowing data scientists to navigate uncertainty and draw credible inferences from sample data. By defining null and alternative hypotheses, selecting significance levels, and applying statistical tests, researchers can check their assumptions against evidence.

This article emphasizes the distinction between Type I and Type II errors and their relevance in data science and machine learning. The practical example of a paired t-test assessing a new drug’s effect on blood pressure underscores the importance of statistical rigor in data-driven decision-making.

Ultimately, understanding hypothesis testing, along with how to apply it in Python, strengthens analytical work in data mining and data analytics and supports informed decision-making.

Understanding Hypothesis Testing: FAQs

What is hypothesis testing in data science?

In data science, hypothesis testing is used to validate assumptions or claims about data. It helps data scientists determine whether observed patterns are statistically significant or could have occurred by chance.

How does hypothesis testing work in machine learning?

In machine learning, hypothesis testing helps assess the effectiveness of models. For example, it can be used to compare the performance of different algorithms or to evaluate whether a new feature significantly improves a model’s accuracy.

What is hypothesis testing in ML?

It is a statistical method for evaluating the performance and validity of machine learning models. It tests specific hypotheses about model behavior, such as whether a feature influences predictions or whether a model generalizes well to unseen data.

What is the difference between Pytest and hypothesis in Python?

Pytest is a general-purpose testing framework for Python code, while Hypothesis is a property-based testing library for Python that generates test cases from specified properties of the code. Neither is related to statistical hypothesis testing.

What is the difference between hypothesis testing and data mining?

Hypothesis testing focuses on evaluating specific claims or hypotheses about a dataset, while data mining involves exploring large datasets to discover patterns, relationships, or insights without predefined hypotheses.

How is hypothesis generation used in business analytics?

In business analytics, hypothesis generation involves formulating assumptions or predictions based on available data. These hypotheses can then be tested using statistical methods to inform decision-making and strategy.

What is the significance level in hypothesis testing?

The significance level, often denoted as alpha (α), is the threshold for deciding whether to reject the null hypothesis. It equals the probability of making a Type I error, that is, rejecting a null hypothesis that is actually true. Common significance levels are 0.05, 0.01, and 0.10.


Hypothesis in Machine Learning

October 30, 2024

Anshuman Singh


Machine learning involves building models that learn from data to make predictions or decisions. A hypothesis plays a crucial role in this process by serving as a candidate solution or function that maps input data to desired outputs. Essentially, a hypothesis is an assumption made by the learning algorithm about the relationship between features (input data) and target values (output). The goal of machine learning is to find the best hypothesis that performs well on unseen data.

This article will explore the concept of hypothesis in machine learning, covering its formulation, representation, evaluation, and importance in building reliable models. We will also differentiate between hypotheses in machine learning and statistical hypothesis testing, offering a clear understanding of how these concepts differ.

What is Hypothesis?

In simple terms, a hypothesis is an assumption or a possible solution that explains the relationship between inputs and outputs in a model. It’s like a mathematical function that predicts the target value (output) for given input data.

In machine learning, the hypothesis is different from a statistical hypothesis.

Hypothesis in machine learning:

- It is a function learned by the model to map input data to predicted outputs.

- For example, in a linear regression model, the hypothesis might be y = mx + c, where m and c are parameters learned during training.

Statistical hypothesis:

- This refers to an assumption about a population parameter, such as testing whether two means are equal.

While both involve assumptions, in machine learning, the hypothesis focuses on learning patterns from data, whereas in statistics, it focuses on testing assumptions about data.
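To make the machine learning sense of the word concrete, the sketch below treats a hypothesis as an ordinary function. The helper name `make_linear_hypothesis` and the parameter values are hypothetical; each choice of m and c gives a different candidate hypothesis of the form y = mx + c.

```python
def make_linear_hypothesis(m, c):
    """Return the hypothesis h(x) = m * x + c for fixed parameters m and c."""
    def h(x):
        return m * x + c
    return h

# Two different candidate hypotheses from the same family of linear functions
h1 = make_linear_hypothesis(m=2.0, c=1.0)
h2 = make_linear_hypothesis(m=0.5, c=3.0)

print(h1(4.0))  # prediction of the first candidate for x = 4
print(h2(4.0))  # prediction of the second candidate for x = 4
```

Training a model amounts to choosing which of these candidate functions best matches the data.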

How does a Hypothesis work?

In machine learning, a hypothesis functions as a possible mapping between input data and output values. The process of selecting and refining a hypothesis involves several steps and concepts:

- The hypothesis space is the set of all possible hypotheses (functions) the algorithm can choose from. For example, in linear regression, the space includes all linear equations of the form y = mx + c.

- Each learning algorithm defines its own hypothesis space, such as decision trees, neural networks, or linear models.

- A hypothesis is selected based on the given data and the type of learning algorithm.

- For example, a neural network might formulate a complex function with multiple hidden layers, while a decision tree divides the input space into distinct regions based on conditions.

- The algorithm searches through the hypothesis space to find the hypothesis that performs best on the training data. This is done by minimizing errors using methods like gradient descent.

- The goal is to identify the hypothesis that not only fits the training data but also generalizes well to unseen data.
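The search described above can be sketched for the linear case with plain gradient descent on the mean squared error. This is a minimal illustration under stated assumptions: the function name `fit_line`, the learning rate, the number of epochs, and the toy data (generated from y = 2x + 1) are all chosen for the example.

```python
def fit_line(xs, ys, learning_rate=0.01, epochs=5000):
    """Search the space of hypotheses y = m*x + c by gradient descent on MSE."""
    m, c = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors of the current hypothesis on the training data
        errors = [(m * x + c) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to m and c
        grad_m = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_c = (2 / n) * sum(errors)
        # Step against the gradient to reduce the error
        m -= learning_rate * grad_m
        c -= learning_rate * grad_c
    return m, c

# Toy training data generated from y = 2x + 1 (illustrative, noise-free)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

m, c = fit_line(xs, ys)
print(f"Learned hypothesis: y = {m:.3f}x + {c:.3f}")
```

On this noise-free data the learned parameters approach m = 2 and c = 1. Fitting the training data is only half the goal; generalization has to be checked on data the search never saw.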

Hypothesis Space and Representation in Machine Learning

The hypothesis space refers to the collection of all possible hypotheses (functions) that a learning algorithm can select from to solve a problem. This space defines the boundaries within which the model searches for the best hypothesis to fit the data.

1. Hypothesis Space Definition

- In mathematical terms, the hypothesis space is denoted as H, where each element h in H is a possible hypothesis. For example, in linear regression, H contains all linear functions of the form y = mx + c.

2. Types of Hypothesis Spaces Based on Algorithms

- Linear Models: The hypothesis space contains linear equations that map input features to output values.

- Decision Trees: The space includes different ways of splitting data to form a tree, with each tree structure representing a unique hypothesis.

- Neural Networks: The space consists of various architectures (e.g., different numbers of layers or nodes), with each configuration being a potential hypothesis.

3. Balancing Complexity and Simplicity:

- A larger hypothesis space provides flexibility but increases the risk of overfitting. Conversely, a smaller hypothesis space may limit the model’s performance and lead to underfitting.

- The choice of hypothesis space is closely related to model selection, as it influences how well the model can capture patterns in data.

Hypothesis Formulation and Representation in Machine Learning

The process of formulating a hypothesis involves defining a function that reflects the relationship between input data and output predictions. This formulation is influenced by the nature of the problem, the dataset, and the choice of learning algorithm.

1. Steps in Formulating a Hypothesis:

- Understand the Problem: Identify the type of task—classification, regression, or clustering.

- Select an Algorithm: Choose an appropriate algorithm (e.g., linear regression for predicting continuous values or decision trees for classification).

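- Define the Hypothesis Function: Specify the form of the function the algorithm will learn. For example, in linear regression the hypothesis is y = mx + c.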

- In a decision tree, the hypothesis takes the form of conditional rules (e.g., “If income > 50K, then class = high”).

2. Role of Model Selection:

- Choosing the right model helps define the hypothesis space. Models like support vector machines, neural networks, or k-nearest neighbors use different types of functions as hypotheses.

3. Hyperparameter Tuning in Formulating Hypotheses:

- Hyperparameters, such as learning rate or tree depth, affect the hypothesis by controlling how the model learns patterns. Proper tuning makes it more likely that the selected hypothesis generalizes well to new data.

Hypothesis Evaluation

Once a hypothesis is formulated, it must be evaluated to determine how well it fits the data. This evaluation helps assess whether the chosen hypothesis is appropriate for the task and if it generalizes well to unseen data.

1. Role of Loss Function:

- A loss function measures the difference between the predicted output and the actual output.

- Mean Squared Error (MSE): Used for regression tasks to measure average squared differences between predictions and actual values.

- Cross-Entropy Loss: Applied in classification tasks to assess the difference between predicted probabilities and actual labels.
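The two loss functions above can be written in a few lines of plain Python. This is a sketch, not a library implementation: the function names, the clipping constant `eps`, and the sample values are assumptions made for illustration, and the cross-entropy shown is the binary form.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average squared difference between actual and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Average negative log-likelihood of 0/1 labels under predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

# Illustrative values only
mse = mean_squared_error([3.0, 5.0, 7.0], [2.5, 5.0, 8.0])
bce = binary_cross_entropy([1, 0, 1], [0.9, 0.2, 0.6])
print(f"MSE = {mse:.4f}, cross-entropy = {bce:.4f}")
```

Both losses are zero only when predictions match the targets exactly, and both grow as the hypothesis moves away from the data.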

2. Performance Metrics:

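- Accuracy: The fraction of predictions that are correct; used for classification tasks.

- Precision and Recall: More informative than accuracy on imbalanced datasets. Precision is the fraction of predicted positives that are truly positive, and recall is the fraction of actual positives that the model finds.

- R² Score: For regression models, the proportion of variance in the actual values that the predictions explain.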
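A short sketch of these metrics in plain Python follows. The function names and the example labels are hypothetical; the classification example is deliberately imbalanced to show why accuracy alone can mislead.

```python
def classification_metrics(y_true, y_pred):
    """Return (accuracy, precision, recall) for binary labels 0/1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, precision, recall

def r_squared(y_true, y_pred):
    """Proportion of variance in y_true explained by y_pred."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot

# Imbalanced toy labels: only 2 positives out of 10 (made-up data)
y_true = [1, 0, 0, 0, 0, 0, 0, 0, 1, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
accuracy, precision, recall = classification_metrics(y_true, y_pred)
print(accuracy, precision, recall)

r2 = r_squared([3.0, 5.0, 7.0, 9.0], [2.8, 5.1, 7.2, 8.9])
print(f"R^2 = {r2:.3f}")
```

In this toy case accuracy is high even though the model misses half of the positive cases, which is exactly what recall exposes.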

3. Iterative Improvement:

- If the initial hypothesis does not perform well, the learning algorithm iteratively updates it to minimize the loss. This is done through optimization techniques like gradient descent.

Hypothesis Testing and Generalization

In machine learning, testing a hypothesis is crucial to ensure the model not only fits the training data but also performs well on new, unseen data. This concept is closely tied to generalization: the ability of a model to maintain its performance on data it was not trained on.

1. Overfitting and Underfitting:

- Overfitting: The model learns patterns, including noise, from the training data, leading to poor performance on new data.

- Underfitting: The model is too simplistic and fails to capture the underlying patterns in the data, resulting in low accuracy on both training and test data.

2. Techniques to Avoid Overfitting and Underfitting:

- Regularization: Adds penalties to the model’s complexity to prevent overfitting (e.g., L1 and L2 regularization).

- Cross-Validation: Splits the dataset into multiple subsets, training on some and validating on the rest in turn, to estimate how well the model generalizes to unseen data.

3. The Importance of Generalization:

- A hypothesis that generalizes well offers reliable predictions and works effectively in real-world scenarios.

- Learning algorithms aim to strike a balance between underfitting and overfitting, ensuring the model learns meaningful patterns without becoming too complex.
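The cross-validation idea mentioned above can be sketched as a k-fold index splitter. This is a simplified illustration: the generator name `k_fold_indices` is hypothetical, and it does not shuffle the data, which a real workflow would usually do first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size

folds = list(k_fold_indices(n_samples=10, k=3))
for train, validation in folds:
    print("train:", train, "validation:", validation)
```

Each sample is used for validation exactly once, so averaging the validation scores over the folds gives an estimate of generalization that does not depend on a single train/test split.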

Hypothesis in Statistics

In statistics, a hypothesis refers to an assumption or claim about a population parameter. Unlike the hypothesis in machine learning, which focuses on making predictions, statistical hypotheses are tested to determine whether they hold true based on sample data.

- Null Hypothesis (H₀): States that there is no significant difference or relationship between variables (e.g., “There is no difference in the average heights of men and women”).

- Alternative Hypothesis (H₁): Contradicts the null hypothesis and suggests that there is a significant difference or relationship (e.g., “The average height of men is different from that of women”).

How Hypothesis Testing Differs from Machine Learning Hypothesis Evaluation:

Statistical hypothesis testing:

- Involves rejecting or failing to reject the null hypothesis based on evidence from sample data.

- Uses p-values and significance levels to determine whether the results are statistically significant.

Machine learning hypothesis evaluation:

- Focuses on finding the best hypothesis (model function) that maps inputs to outputs.

- Evaluates performance using loss functions and cross-validation rather than statistical significance.

Significance Level

In statistical hypothesis testing, the significance level (denoted as α) is the threshold used to determine whether to reject the null hypothesis. It indicates the probability of rejecting the null hypothesis when it is actually true (Type I error).

Common significance levels:

- 0.05 (5%): This is the most commonly used level, meaning there is a 5% risk of incorrectly rejecting the null hypothesis.

- 0.01 (1%): Used in cases where stronger evidence is required.

- 0.10 (10%): In some exploratory studies, a higher significance level is accepted.

How Significance Level Works:

- If the p-value (calculated from the test) is less than or equal to the significance level (α), the null hypothesis is rejected, indicating the result is statistically significant.

- If the p-value is greater than α, there is not enough evidence to reject the null hypothesis.

P-value

The p-value is a crucial concept in statistical hypothesis testing that helps determine the strength of the evidence against the null hypothesis (H₀). It represents the probability of obtaining a test result at least as extreme as the one observed, assuming the null hypothesis is true.

- A small p-value (≤ α) indicates strong evidence against the null hypothesis, leading to its rejection.

- A large p-value (> α) suggests weak evidence against the null hypothesis, meaning we fail to reject it.

For example:

- If the significance level (α) is 0.05 and the p-value is 0.03, the result is considered statistically significant, and we reject the null hypothesis.

- If the p-value is 0.07, we do not reject the null hypothesis at the 0.05 significance level.
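The decision rule in these examples fits in a single comparison. The function name `decide` and its return strings are chosen here for illustration.

```python
def decide(p_value, alpha=0.05):
    """Apply the p-value decision rule at significance level alpha."""
    return "reject H0" if p_value <= alpha else "fail to reject H0"

print(decide(0.03))              # p-value below alpha
print(decide(0.07))              # p-value above alpha
print(decide(0.07, alpha=0.10))  # same p-value, looser threshold
```

The last call shows that the same p-value can lead to a different decision under a different significance level, which is why α should be fixed before looking at the data.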

In machine learning, a hypothesis is a function that maps inputs to outputs. The process of formulating and evaluating hypotheses is key to building models that generalize well. Concepts like hypothesis space, overfitting, and loss functions guide the selection of the best hypothesis.

Unlike statistical hypothesis testing, which evaluates assumptions about data, machine learning hypotheses focus on learning patterns for prediction. Understanding these concepts helps in building better-performing models, which makes hypothesis formulation a crucial part of any machine learning project.

Featured articles

November 22, 2024

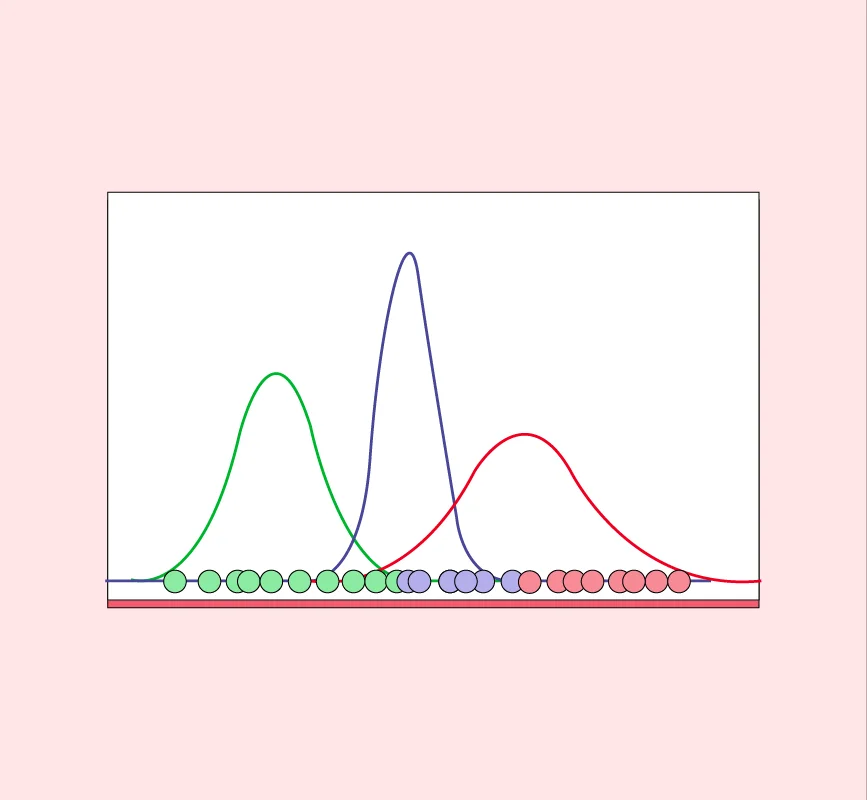

Gaussian Mixture Model in Machine Learning

Artificial Intelligence (AI) in Healthcare

Role of Artificial Intelligence in Education

Team Applied AI