Inferential Statistics | An Easy Introduction & Examples

Published on September 4, 2020 by Pritha Bhandari. Revised on June 22, 2023.

While descriptive statistics summarize the characteristics of a data set, inferential statistics help you come to conclusions and make predictions based on your data.

When you have collected data from a sample, you can use inferential statistics to understand the larger population from which the sample is taken.

Inferential statistics have two main uses:

- making estimates about populations (for example, the mean SAT score of all 11th graders in the US).

- testing hypotheses to draw conclusions about populations (for example, the relationship between SAT scores and family income).

Descriptive statistics allow you to describe a data set, while inferential statistics allow you to make inferences based on a data set.

Descriptive statistics

Using descriptive statistics, you can report characteristics of your data:

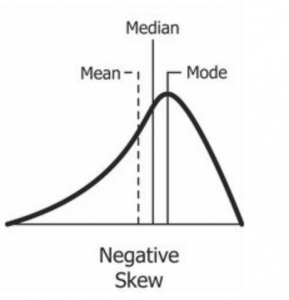

- The distribution concerns the frequency of each value.

- The central tendency concerns the averages of the values.

- The variability concerns how spread out the values are.

In descriptive statistics, there is no uncertainty – the statistics precisely describe the data that you collected. If you collect data from an entire population, you can directly compare these descriptive statistics to those from other populations.

Inferential statistics

Most of the time, you can only acquire data from samples, because it is too difficult or expensive to collect data from the whole population that you’re interested in.

While descriptive statistics can only summarize a sample’s characteristics, inferential statistics use your sample to make reasonable guesses about the larger population.

With inferential statistics, it’s important to use random and unbiased sampling methods. If your sample isn’t representative of your population, then you can’t make valid statistical inferences or generalize your findings.

Sampling error in inferential statistics

Since the size of a sample is always smaller than the size of the population, some of the population isn’t captured by sample data. This creates sampling error, which is the difference between the true population values (called parameters) and the measured sample values (called statistics).

Sampling error arises any time you use a sample, even if your sample is random and unbiased. For this reason, there is always some uncertainty in inferential statistics. However, using probability sampling methods reduces this uncertainty.
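To see sampling error in action, here is a minimal simulation sketch. The population of 100,000 scores, its mean of 500, and the helper name `typical_sampling_error` are all made up for illustration. It draws repeated simple random samples and reports how far the sample mean typically lands from the population mean.

```python
import random
import statistics

random.seed(42)  # fixed seed so the sketch is reproducible

# Hypothetical population of 100,000 test scores (made-up values).
population = [random.gauss(500, 100) for _ in range(100_000)]
parameter = statistics.fmean(population)  # population mean: the parameter


def typical_sampling_error(sample_size, repeats=200):
    """Average absolute gap between the sample mean and the population mean."""
    gaps = []
    for _ in range(repeats):
        sample = random.sample(population, sample_size)  # simple random sample
        statistic = statistics.fmean(sample)  # sample mean: the statistic
        gaps.append(abs(statistic - parameter))
    return statistics.fmean(gaps)


for n in (10, 100, 1_000, 10_000):
    print(f"n={n:>6}: typical sampling error = {typical_sampling_error(n):.2f}")
```

Even though every sample here is random and unbiased, the gap is never exactly zero. In this sketch it shrinks as the sample size grows.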

The characteristics of samples and populations are described by numbers called statistics and parameters:

- A statistic is a measure that describes the sample (e.g., sample mean).

- A parameter is a measure that describes the whole population (e.g., population mean).

Sampling error is the difference between a parameter and a corresponding statistic. Since in most cases you don’t know the real population parameter, you can use inferential statistics to estimate these parameters in a way that takes sampling error into account.

There are two important types of estimates you can make about the population: point estimates and interval estimates.

- A point estimate is a single value estimate of a parameter. For instance, a sample mean is a point estimate of a population mean.

- An interval estimate gives you a range of values where the parameter is expected to lie. A confidence interval is the most common type of interval estimate.

Both types of estimates are important for gathering a clear idea of where a parameter is likely to lie.

Confidence intervals

A confidence interval uses the variability around a statistic to come up with an interval estimate for a parameter. Confidence intervals are useful for estimating parameters because they take sampling error into account.

While a point estimate gives you a precise value for the parameter you are interested in, a confidence interval tells you the uncertainty of the point estimate. They are best used in combination with each other.

Each confidence interval is associated with a confidence level. A confidence level tells you the percentage of intervals that would contain the population parameter if you repeated the study many times, each with a new sample.

A 95% confidence interval means that if you repeat your study with a new sample in exactly the same way 100 times, you can expect about 95 of the resulting intervals to contain the true population parameter.

You cannot say for sure that the interval from any one study contains the actual population parameter. That’s because you can’t know the true value of the population parameter without collecting data from the full population.

However, with random sampling and a suitable sample size, you can reasonably expect your confidence interval to contain the parameter a certain percentage of the time.
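One way to check what a confidence level means is to simulate many repeated studies and count how often the interval captures the true value. The sketch below is only an illustration: the population mean of 19 and standard deviation of 6 are assumed values, and the normal (z) critical value is used because each simulated sample is fairly large.

```python
import random
import statistics
from statistics import NormalDist

random.seed(0)  # fixed seed so the sketch is reproducible

TRUE_MEAN, TRUE_SD = 19.0, 6.0      # assumed population values (hypothetical)
Z_95 = NormalDist().inv_cdf(0.975)  # critical value for a 95% interval


def confidence_interval(sample, z=Z_95):
    """Interval estimate: sample mean plus or minus z standard errors."""
    mean = statistics.fmean(sample)
    standard_error = statistics.stdev(sample) / len(sample) ** 0.5
    return mean - z * standard_error, mean + z * standard_error


def coverage(n_studies=2000, n=100):
    """Share of repeated studies whose interval contains the true mean."""
    hits = 0
    for _ in range(n_studies):
        sample = [random.gauss(TRUE_MEAN, TRUE_SD) for _ in range(n)]
        low, high = confidence_interval(sample)
        hits += low <= TRUE_MEAN <= high
    return hits / n_studies


print(f"Share of intervals containing the true mean: {coverage():.3f}")
```

The printed share should land close to 0.95. Any single interval either contains the parameter or it doesn’t.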

Your point estimate of the population mean paid vacation days is the sample mean of 19 paid vacation days.

Hypothesis testing is a formal process of statistical analysis using inferential statistics. The goal of hypothesis testing is to compare populations or assess relationships between variables using samples.

Hypotheses, or predictions, are tested using statistical tests. Statistical tests also estimate sampling errors so that valid inferences can be made.

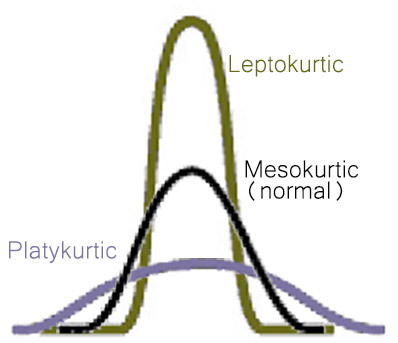

Statistical tests can be parametric or non-parametric. Parametric tests are considered more statistically powerful because they are more likely to detect an effect if one exists.

Parametric tests make assumptions that include the following:

- the population that the sample comes from follows a normal distribution of scores

- the sample size is large enough to represent the population

- the variances (a measure of variability) of the groups being compared are similar

When your data violates any of these assumptions, non-parametric tests are more suitable. Non-parametric tests are called “distribution-free tests” because they don’t assume anything about the distribution of the population data.

Statistical tests come in three forms: tests of comparison, correlation or regression.

Comparison tests

Comparison tests assess whether there are differences in means, medians or rankings of scores of two or more groups.

To decide which test suits your aim, consider whether your data meets the conditions necessary for parametric tests, the number of samples, and the levels of measurement of your variables.

Means can only be found for interval or ratio data, while medians and rankings are more appropriate measures for ordinal data.

| Comparison test | Parametric? | What’s being compared? | Samples |
|---|---|---|---|
| t test | Yes | Means | 2 samples |
| ANOVA | Yes | Means | 3+ samples |
| Mood’s median | No | Medians | 2+ samples |
| Wilcoxon signed-rank | No | Distributions | 2 samples |
| Wilcoxon rank-sum (Mann-Whitney U) | No | Sums of rankings | 2 samples |
| Kruskal-Wallis | No | Mean rankings | 3+ samples |

Correlation tests

Correlation tests determine the extent to which two variables are associated.

Although Pearson’s r is the most statistically powerful test, Spearman’s r is appropriate for interval and ratio variables when the data doesn’t follow a normal distribution.

The chi square test of independence is the only test that can be used with nominal variables.

| Correlation test | Parametric? | Variables |
|---|---|---|
| Pearson’s r | Yes | Interval/ratio variables |
| Spearman’s r | No | Ordinal/interval/ratio variables |
| Chi square test of independence | No | Nominal/ordinal variables |
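To make the difference between Pearson’s r and Spearman’s r concrete, here is a small sketch in plain Python. The function names and the toy data are illustrative assumptions, not part of any particular library. Spearman’s coefficient is computed as Pearson’s r applied to the ranks of the values.

```python
import math


def pearson_r(x, y):
    """Pearson's r: strength of the linear association between x and y."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def spearman_r(x, y):
    """Spearman's coefficient: Pearson's r computed on the ranks."""
    return pearson_r(ranks(x), ranks(y))


x = [1, 2, 3, 4, 5, 6]
y = [value ** 3 for value in x]  # monotonic but clearly non-linear

print(f"Pearson's r:  {pearson_r(x, y):.3f}")
print(f"Spearman's r: {spearman_r(x, y):.3f}")
```

Because the toy relationship is perfectly monotonic but not linear, Spearman’s coefficient reaches 1 while Pearson’s r stays below it.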

Regression tests

Regression tests assess whether changes in predictor variables are associated with changes in an outcome variable. You can decide which regression test to use based on the number and types of variables you have as predictors and outcomes.

Most of the commonly used regression tests are parametric. If your data is not normally distributed, you can perform data transformations.

Data transformations help you make your data normally distributed using mathematical operations, like taking the square root of each value.
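As a rough illustration of a data transformation, the sketch below generates right-skewed data and compares its skewness before and after taking square roots. The exponential data and the `skewness` helper are assumptions made for this example. A transformation like this can bring data closer to a normal shape, but it does not guarantee normality.

```python
import math
import random
import statistics


def skewness(values):
    """Skewness of the values; roughly 0 for a symmetric distribution."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return statistics.fmean([((v - mean) / sd) ** 3 for v in values])


random.seed(1)  # fixed seed so the sketch is reproducible

# Hypothetical right-skewed measurements (exponentially distributed).
raw = [random.expovariate(1.0) for _ in range(5000)]
transformed = [math.sqrt(v) for v in raw]  # square-root transformation

print(f"Skewness before: {skewness(raw):.2f}")
print(f"Skewness after:  {skewness(transformed):.2f}")
```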

| Regression test | Predictor | Outcome |
|---|---|---|
| Simple linear regression | 1 interval/ratio variable | 1 interval/ratio variable |
| Multiple linear regression | 2+ interval/ratio variable(s) | 1 interval/ratio variable |
| Logistic regression | 1+ any variable(s) | 1 binary variable |
| Nominal regression | 1+ any variable(s) | 1 nominal variable |
| Ordinal regression | 1+ any variable(s) | 1 ordinal variable |
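For the simplest case in the table, one interval/ratio predictor and one interval/ratio outcome, a least-squares line can be fitted in a few lines of Python. This is a minimal sketch: the study-hours data and the function name `fit_line` are made up for illustration, and no significance test is performed.

```python
def fit_line(x, y):
    """Ordinary least squares for one predictor: returns (slope, intercept)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: hours studied (predictor) and test score (outcome).
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]

slope, intercept = fit_line(hours, scores)
print(f"score = {intercept:.1f} + {slope:.1f} * hours")
```

The slope describes how the outcome changes, on average, with each extra unit of the predictor in this sample.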

Descriptive statistics summarize the characteristics of a data set. Inferential statistics allow you to test a hypothesis or assess whether your data is generalizable to the broader population.

A statistic refers to measures about the sample, while a parameter refers to measures about the population.

A sampling error is the difference between a population parameter and a sample statistic.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

Bhandari, P. (2023, June 22). Inferential Statistics | An Easy Introduction & Examples. Scribbr. Retrieved August 28, 2024, from https://www.scribbr.com/statistics/inferential-statistics/

An Introduction to Inferential Analysis in Qualitative Research

January 10, 2021

By Aidan Gray

When conducting qualitative research, a researcher may adopt an inferential or deductive approach. For example, research questionnaires are primarily used as a means to obtain data on customer satisfaction or level of knowledge about a particular topic. The questionnaires themselves are not necessarily qualitative, but are descriptive of a given set of facts (usually referred to as “observational data” or “subjective data”). However, the questionnaires are designed to answer specific questions that will provide the researcher with data to support a central claim. If the data does not support the claimed conclusion, then the researcher should reject the theory, but if the data does support the conclusion, the researcher should use that conclusion to support a thesis.

Research theories, however, are not a pure, monolithic category. They can be of many different types. In research methodology, the theories are descriptive and predictive of the actual empirical results of research efforts. When this is done, the researcher is said to have conducted a “lull theory”, in reference to the fact that when people are at a relaxed state, their answers tend to reflect reality more closely than answers they would give while at work or in school.

Another type of theory in research methodology is descriptive data theory. This refers to methods of testing a hypothesis by examining a large number of facts that are independent of the original study and using those facts to construct a hypothesis about the original data. More specifically, this would be used to test the generalizability of the theory. It is often called a falsification theory because it attempts to refute, rather than simply confirm, the original hypothesis.

Another method called measurement theory is popularly used in research methodology. It is best explained as a way to test the generalizability of a research method. The purpose of measuring is to provide quantitative proof that the original, descriptive method is sound. For instance, a researcher conducting an experiment may choose to use a t-test or a chi-square test. Both of these methods are considered to be valid testing methods when compared to null results.

Inferential Approach In Research

Another important tool used in qualitative research is questionnaires. These questionnaires allow a researcher to obtain information from a large number of people, many of whom are likely not relevant to the topic being investigated. For example, a survey might be designed to investigate the relationships between smoking and weight. In this case, the questions would likely address things like demographics, beliefs about smoking and weight and various other factors that directly affect smoking prevalence. Questionnaires can also be used to investigate if certain behaviors affect people in different ways and to find out if there is consistency within groups concerning those behaviors.

Most research questionnaires, however, fall under the more descriptive category. These questionnaires are designed to gather data that will support the main topic of the research. Some examples include surveys on organizational behavior, attitudes toward sexuality and the HIV epidemic among others. These questionnaires are also typically longer than those used in clinical research. For example, an organizational survey might last up to 8 pages while a questionnaire for a clinical trial could be lengthy as well as drawn from a variety of sources.

Other forms of quantitative research rely heavily on descriptive analysis and statistical measures. For example, studies about student drinking and driving have to make sure that they have appropriate sampling tools and that their questionnaires and methodology are accurate. Demographics must be collected to accurately determine where the focus of a given study fits within a population. This type of research can also depend heavily on the use of statistical measures and analysis.

When a qualitative researcher resorts to the inferential approach, they generally are doing so because they do not have an exact idea of the answer that would result from a directed question or a graphical representation. The inferential approach allows them to infer a probability based on the information that is available to them. In most cases, the researcher uses statistical methods and data to come to a conclusion. If they choose to rely solely on the descriptive aspects of the topic they are researching, then they are limiting their potential to provide quantitative proof. Qualitative researchers must then follow certain rules in order to use statistics and other empirical measures in a way that helps them draw conclusions about a topic.

Inferential Statistics – Types, Methods and Examples

Inferential Statistics

Inferential statistics is a branch of statistics that involves making predictions or inferences about a population based on a sample of data taken from that population. It is used to analyze the probabilities, assumptions, and outcomes of a hypothesis.

The basic steps of inferential statistics typically involve the following:

- Define a Hypothesis: This is often a statement about a parameter of a population, such as the population mean or population proportion.

- Select a Sample: In order to test the hypothesis, you’ll select a sample from the population. This should be done randomly and should be representative of the larger population in order to avoid bias.

- Collect Data: Once you have your sample, you’ll need to collect data. This data will be used to calculate statistics that will help you test your hypothesis.

- Perform Analysis: The collected data is then analyzed using statistical tests such as the t-test, chi-square test, or ANOVA, to name a few. These tests help to determine how likely results like yours would be if chance alone were at work.

- Interpret Results: The analysis can provide a probability, called a p-value, which is the probability of obtaining results at least as extreme as yours if the null hypothesis (the statement that there is no effect or relationship) were true. If this probability is below a chosen threshold (commonly 0.05), you may reject the null hypothesis in favor of the alternative hypothesis (the statement that there is an effect or relationship).

Inferential Statistics Types

Inferential statistics can be broadly categorized into two types: parametric and nonparametric. The selection of type depends on the nature of the data and the purpose of the analysis.

Parametric Inferential Statistics

These are statistical methods that assume the data comes from a particular type of probability distribution and make inferences about the parameters of that distribution. Common parametric methods include:

- T-tests: Used when comparing the means of two groups to see if they’re significantly different.

- Analysis of Variance (ANOVA): Used to compare the means of more than two groups.

- Regression Analysis: Used to predict the value of one variable (dependent) based on the value of another variable (independent).

- Chi-square test for independence: Used to test if there is a significant association between two categorical variables.

- Pearson’s correlation: Used to test if there is a significant linear relationship between two continuous variables.

Nonparametric Inferential Statistics

These are methods used when the data does not meet the requirements necessary to use parametric statistics, such as when data is not normally distributed. Common nonparametric methods include:

- Mann-Whitney U Test: Non-parametric equivalent to the independent samples t-test.

- Wilcoxon Signed-Rank Test: Non-parametric equivalent to the paired samples t-test.

- Kruskal-Wallis Test: Non-parametric equivalent to the one-way ANOVA.

- Spearman’s rank correlation: Non-parametric equivalent to the Pearson correlation.

- Chi-square test for goodness of fit: Used to test if the observed frequencies for a categorical variable match the expected frequencies.

Inferential Statistics Formulas

Inferential statistics use various formulas and statistical tests to draw conclusions or make predictions about a population based on a sample from that population. Here are a few key formulas commonly used:

Confidence Interval for a Mean:

When you have a sample and want to make an inference about the population mean (µ), you might use a confidence interval.

The formula for a confidence interval around a mean is:

[Sample Mean] ± [Z-score or T-score] * (Standard Deviation / sqrt[n]) where:

- Sample Mean is the mean of your sample data

- Z-score or T-score is the value from the Z or T distribution corresponding to the desired confidence level (Z is used when the population standard deviation is known or the sample size is large, otherwise T is used)

- Standard Deviation is the standard deviation of the sample

- sqrt[n] is the square root of the sample size
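As a worked illustration of the confidence interval formula, the sketch below plugs in summary numbers directly. The sample mean of 19, standard deviation of 6, and sample size of 100 are made-up values, and the function name `ci_from_summary` is just for this example. It uses a Z-score, which suits a large sample. For a small sample you would swap in the matching T-score.

```python
import math
from statistics import NormalDist


def ci_from_summary(sample_mean, standard_deviation, n, confidence=0.95):
    """Sample Mean ± Z-score * (Standard Deviation / sqrt[n])."""
    z_score = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z_score * standard_deviation / math.sqrt(n)
    return sample_mean - margin, sample_mean + margin


# Hypothetical summary statistics from a sample of 100 observations.
low, high = ci_from_summary(sample_mean=19, standard_deviation=6, n=100)
print(f"95% confidence interval: {low:.2f} to {high:.2f}")
```

Raising the confidence level widens the interval, while a larger sample size narrows it.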

Hypothesis Testing:

Hypothesis testing often involves calculating a test statistic, which is then compared to a critical value to decide whether to reject the null hypothesis.

A common test statistic for a test about a mean is the Z-score:

Z = (Sample Mean - Hypothesized Population Mean) / (Standard Deviation / sqrt[n])

where all variables are as defined above.
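The Z-score formula can be sketched in Python as follows. The numbers (a sample mean of 19 against a hypothesized mean of 18, standard deviation 6, n = 100) and the function name `z_test` are assumptions chosen for illustration. The two-sided p-value comes from the standard normal distribution, which is reasonable when the population standard deviation is known or the sample is large.

```python
import math
from statistics import NormalDist


def z_test(sample_mean, hypothesized_mean, standard_deviation, n):
    """Return the Z statistic and its two-sided p-value."""
    standard_error = standard_deviation / math.sqrt(n)
    z = (sample_mean - hypothesized_mean) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical study values.
z, p_value = z_test(sample_mean=19, hypothesized_mean=18,
                    standard_deviation=6, n=100)
print(f"Z = {z:.2f}, two-sided p-value = {p_value:.3f}")
```

With these made-up numbers the p-value is above 0.05, so you would not reject the null hypothesis at that level.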

Chi-Square Test:

The Chi-Square Test is used when dealing with categorical data.

The formula is:

χ² = Σ [ (Observed-Expected)² / Expected ] where:

- Observed is the actual observed frequency

- Expected is the frequency we would expect if the null hypothesis were true
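Here is a small sketch of the chi-square formula applied to a goodness-of-fit question. The die-roll counts are invented for the example, and `chi_square` is an illustrative helper name. The critical value of 11.070 is the standard table value for 5 degrees of freedom at the 0.05 level.

```python
def chi_square(observed, expected):
    """Sum of (Observed - Expected)^2 / Expected over all categories."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


# Hypothetical data: a die rolled 60 times.
observed = [8, 9, 12, 11, 6, 14]
expected = [10] * 6  # a fair die gives 10 per face under the null hypothesis

statistic = chi_square(observed, expected)
degrees_of_freedom = len(observed) - 1
CRITICAL_VALUE = 11.070  # chi-square table value for df = 5, alpha = 0.05

print(f"chi-square = {statistic:.1f} with {degrees_of_freedom} degrees of freedom")
print("Reject the null hypothesis" if statistic > CRITICAL_VALUE
      else "Do not reject the null hypothesis")
```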

T-Test:

The t-test is used to compare the means of two groups. The formula for the independent samples t-test is:

t = (mean1 - mean2) / sqrt [ (sd1²/n1) + (sd2²/n2) ] where:

- mean1 and mean2 are the sample means

- sd1 and sd2 are the sample standard deviations

- n1 and n2 are the sample sizes
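The t formula above keeps each group’s variance separate, which is the form usually called Welch’s t-test. The sketch below computes that statistic for two made-up groups. The degrees-of-freedom line uses the Welch-Satterthwaite approximation, which is an addition not given in the text above, and `welch_t` is an illustrative name. Turning t into a p-value needs the t distribution, which Python’s standard library does not provide, so that step is left out.

```python
import math
import statistics


def welch_t(group1, group2):
    """t = (mean1 - mean2) / sqrt(sd1^2/n1 + sd2^2/n2), plus approximate df."""
    mean1, mean2 = statistics.fmean(group1), statistics.fmean(group2)
    var1, var2 = statistics.variance(group1), statistics.variance(group2)
    n1, n2 = len(group1), len(group2)
    t = (mean1 - mean2) / math.sqrt(var1 / n1 + var2 / n2)
    # Welch-Satterthwaite approximation (an assumption beyond the formula above).
    df = (var1 / n1 + var2 / n2) ** 2 / (
        (var1 / n1) ** 2 / (n1 - 1) + (var2 / n2) ** 2 / (n2 - 1)
    )
    return t, df


# Hypothetical measurements for two independent groups.
group_a = [5, 6, 7, 8, 9]
group_b = [1, 2, 3, 4, 5]

t, df = welch_t(group_a, group_b)
print(f"t = {t:.2f}, approximate degrees of freedom = {df:.1f}")
```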

Inferential Statistics Examples

Inferential statistics are used when making predictions or inferences about a population from a sample of data. Here are a few real-world examples:

- Medical Research: Suppose a pharmaceutical company is developing a new drug and they’re currently in the testing phase. They gather a sample of 1,000 volunteers to participate in a clinical trial. They find that 700 out of these 1,000 volunteers reported a significant reduction in their symptoms after taking the drug. Using inferential statistics, they can infer that the drug would likely be effective for the larger population.

- Customer Satisfaction: Suppose a restaurant wants to know if its customers are satisfied with their food. They could survey a sample of their customers and ask them to rate their satisfaction on a scale of 1 to 10. If the average rating was 8.5 from a sample of 200 customers, they could use inferential statistics to infer that the overall customer population is likely satisfied with the food.

- Political Polling: A polling company wants to predict who will win an upcoming presidential election. They poll a sample of 10,000 eligible voters and find that 55% prefer Candidate A, while 45% prefer Candidate B. Using inferential statistics, they infer that Candidate A has a higher likelihood of winning the election.

- E-commerce Trends: An e-commerce company wants to improve its recommendation engine. They analyze a sample of customers’ purchase history and notice a trend that customers who buy kitchen appliances also frequently buy cookbooks. They use inferential statistics to infer that recommending cookbooks to customers who buy kitchen appliances would likely increase sales.

- Public Health: A health department wants to assess the impact of a health awareness campaign on smoking rates. They survey a sample of residents before and after the campaign. If they find a significant reduction in smoking rates among the surveyed group, they can use inferential statistics to infer that the campaign likely had an impact on the larger population’s smoking habits.

Applications of Inferential Statistics

Inferential statistics are extensively used in various fields and industries to make decisions or predictions based on data. Here are some applications of inferential statistics:

- Healthcare: Inferential statistics are used in clinical trials to analyze the effect of a treatment or a drug on a sample population and then infer the likely effect on the general population. This helps in the development and approval of new treatments and drugs.

- Business: Companies use inferential statistics to understand customer behavior and preferences, market trends, and to make strategic decisions. For example, a business might sample customer satisfaction levels to infer the overall satisfaction of their customer base.

- Finance: Banks and financial institutions use inferential statistics to evaluate the risk associated with loans and investments. For example, inferential statistics can help in determining the risk of default by a borrower based on the analysis of a sample of previous borrowers with similar credit characteristics.

- Quality Control: In manufacturing, inferential statistics can be used to maintain quality standards. By analyzing a sample of the products, companies can infer the quality of all products and decide whether the manufacturing process needs adjustments.

- Social Sciences: In fields like psychology, sociology, and education, researchers use inferential statistics to draw conclusions about populations based on studies conducted on samples. For instance, a psychologist might use a survey of a sample of people to infer the prevalence of a particular psychological trait or disorder in a larger population.

- Environment Studies: Inferential statistics are also used to study and predict environmental changes and their impact. For instance, researchers might measure pollution levels in a sample of locations to infer overall pollution levels in a wider area.

- Government Policies: Governments use inferential statistics in policy-making. By analyzing sample data, they can infer the potential impacts of policies on the broader population and thus make informed decisions.

Purpose of Inferential Statistics

The purposes of inferential statistics include:

- Estimation of Population Parameters: Inferential statistics allows for the estimation of population parameters. This means that it can provide estimates about population characteristics based on sample data. For example, you might want to estimate the average weight of all men in a country by sampling a smaller group of men.

- Hypothesis Testing: Inferential statistics provides a framework for testing hypotheses. This involves making an assumption (the null hypothesis) and then testing this assumption to see if it should be rejected or not. This process enables researchers to draw conclusions about population parameters based on their sample data.

- Prediction: Inferential statistics can be used to make predictions about future outcomes. For instance, a researcher might use inferential statistics to predict the outcomes of an election or forecast sales for a company based on past data.

- Relationships Between Variables: Inferential statistics can also be used to identify relationships between variables, such as correlation or regression analysis. This can provide insights into how different factors are related to each other.

- Generalization: Inferential statistics allows researchers to generalize their findings from the sample to the larger population. It helps in making broad conclusions, given that the sample is representative of the population.

- Variability and Uncertainty: Inferential statistics also deal with the idea of uncertainty and variability in estimates and predictions. Through concepts like confidence intervals and margins of error, it provides a measure of how confident we can be in our estimations and predictions.

- Error Estimation: Inferential statistics provides measures of possible error (such as margins of error), which indicate how much sample results may differ from the population parameters.

Limitations of Inferential Statistics

Inferential statistics, despite its many benefits, does have some limitations. Here are some of them:

- Sampling Error: Inferential statistics are based on sampling, where a subset of the population is used to draw inferences about the whole population. There’s always a chance that the sample might not perfectly represent the population, leading to sampling errors.

- Misleading Conclusions: If the assumptions of a statistical test are not met, the results can be misleading. This includes assumptions about the distribution of data, homogeneity of variances, independence, etc.

- False Positives and Negatives: There’s always a chance of a Type I error (rejecting a true null hypothesis, or a false positive) or a Type II error (not rejecting a false null hypothesis, or a false negative).

- Dependence on Quality of Data: The accuracy and validity of inferential statistics depend heavily on the quality of data collected. If data are biased, inaccurate, or collected using flawed methods, the results won’t be reliable.

- Limited Predictive Power: While inferential statistics can provide estimates and predictions, these are based on the current data and may not fully account for future changes or variables not included in the model.

- Complexity: Some inferential statistical methods can be quite complex and require a solid understanding of statistical principles to implement and interpret correctly.

- Influenced by Outliers: Inferential statistics can be heavily influenced by outliers. If these extreme values aren’t handled properly, they can lead to misleading results.

- Over-reliance on P-values: There’s a tendency in some fields to overly rely on p-values to determine significance, even though p-values have several limitations and are often misunderstood.
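The false positive limitation can be illustrated with a short simulation. In the sketch below, every simulated experiment samples from a population where the null hypothesis is true (mean 0, known standard deviation 1). All values and names are assumptions for illustration. A Z-test at the 0.05 level still rejects the null in roughly 5% of experiments, and each of those rejections is a Type I error.

```python
import math
import random
from statistics import NormalDist, fmean

random.seed(7)  # fixed seed so the sketch is reproducible
ALPHA = 0.05    # significance threshold


def false_positive_rate(n_experiments=4000, n=30):
    """Share of experiments that reject a null hypothesis that is true."""
    rejections = 0
    for _ in range(n_experiments):
        # The null is true here: the population mean really is 0 (sd = 1).
        sample = [random.gauss(0, 1) for _ in range(n)]
        z = fmean(sample) / (1 / math.sqrt(n))
        p_value = 2 * (1 - NormalDist().cdf(abs(z)))
        rejections += p_value < ALPHA
    return rejections / n_experiments


print(f"Type I error rate: {false_positive_rate():.3f}")
```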

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Quant Analysis 101: Inferential Statistics

Everything You Need To Get Started (With Examples)

By: Derek Jansen (MBA) | Reviewers: Kerryn Warren (PhD) | October 2023

If you’re new to quantitative data analysis, one of the many terms you’re likely to hear being thrown around is inferential statistics. In this post, we’ll provide an introduction to inferential stats, using straightforward language and loads of examples.

What are inferential statistics?

At the simplest level, inferential statistics allow you to test whether the patterns you observe in a sample are likely to be present in the population – or whether they’re just a product of chance.

In stats-speak, this “Is it real or just by chance?” assessment is known as statistical significance. We won’t go down that rabbit hole in this post, but this ability to assess statistical significance means that inferential statistics can be used to test hypotheses and in some cases, they can even be used to make predictions.

That probably sounds rather conceptual – let’s look at a practical example.

Let’s say you surveyed 100 people (this would be your sample) in a specific city about their favourite type of food. Reviewing the data, you found that 70 people selected pizza (i.e., 70% of the sample). You could then use inferential statistics to test whether that number is just due to chance, or whether it is likely representative of preferences across the entire city (this would be your population).

PS – you’d use a chi-square test for this example, but we’ll get to that a little later.
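To make the pizza example a bit more concrete, here’s a minimal Python sketch of a chi-square goodness-of-fit calculation. It assumes, purely for illustration, that the survey had only two options (pizza versus anything else) and that “just chance” means a 50/50 split; neither assumption comes from the example above. The function name is made up, and the p-value shortcut used here only works when there is one degree of freedom.

```python
import math

def chi_square_gof_two_categories(observed, expected):
    """Chi-square goodness-of-fit statistic and p value for two categories (df = 1)."""
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # With 1 degree of freedom, the upper-tail probability has a closed form.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value

observed = [70, 30]  # 70 chose pizza, 30 chose something else
expected = [50, 50]  # assumed null hypothesis: no preference either way
chi2, p = chi_square_gof_two_categories(observed, expected)
print(f"chi-square = {chi2:.2f}, p = {p:.5f}")
```

Under these assumptions the p value comes out far below .05, so a 70/30 split would be very hard to explain by chance alone. A different null hypothesis (or more food categories) would change the numbers.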

Inferential vs Descriptive

At this point, you might be wondering how inferential statistics differ from descriptive statistics. At the simplest level, descriptive statistics summarise and organise the data you already have (your sample), making it easier to understand.

Inferential statistics, on the other hand, allow you to use your sample data to assess whether the patterns contained within it are likely to be present in the broader population, and potentially, to make predictions about that population.

It’s example time again…

Let’s imagine you’re undertaking a study that explores shoe brand preferences among men and women. If you just wanted to identify the proportions of those who prefer different brands, you’d only require descriptive statistics.

However, if you wanted to assess whether those proportions differ between genders in the broader population (and that the difference is not just down to chance), you’d need to utilise inferential statistics.

In short, descriptive statistics describe your sample, while inferential statistics help you understand whether the patterns in your sample are likely to hold in the wider population.

Let’s look at some inferential tests

Now that we’ve defined inferential statistics and explained how they differ from descriptive statistics, let’s take a look at some of the most common tests within the inferential realm. It’s worth highlighting upfront that there are many different types of inferential tests and this is most certainly not a comprehensive list – just an introductory list to get you started.

A t-test is a way to compare the means (averages) of two groups to see if they are meaningfully different, or if the difference is just by chance. In other words, to assess whether the difference is statistically significant. This is important because comparing two means side-by-side can be very misleading if one has a high variance and the other doesn’t (if this sounds like gibberish, check out our descriptive statistics post).

As an example, you might use a t-test to see if there’s a statistically significant difference between the exam scores of two mathematics classes taught by different teachers. This might then lead you to infer that one teacher’s teaching method is more effective than the other.

It’s worth noting that there are a few different types of t-tests. In this example, we’re referring to the independent t-test, which compares the means of two groups, as opposed to the mean of one group at different times (i.e., a paired t-test). Each of these tests has its own set of assumptions and requirements, as do all of the tests we’ll discuss here – but we’ll save assumptions for another post!
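If you’d like to see the moving parts, here’s a rough Python sketch of the independent t-test statistic for the two-classes example. The exam scores are invented, the function name is hypothetical, and the calculation assumes both classes have similar variances (the classic “pooled” version of the test). It stops at the t-value and degrees of freedom rather than computing a p-value.

```python
import math
import statistics

def independent_t(group_a, group_b):
    """Pooled-variance independent t statistic and its degrees of freedom."""
    n_a, n_b = len(group_a), len(group_b)
    var_a = statistics.variance(group_a)  # sample variance (n - 1 in the denominator)
    var_b = statistics.variance(group_b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * var_a + (n_b - 1) * var_b) / df
    standard_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (statistics.mean(group_a) - statistics.mean(group_b)) / standard_error
    return t, df

# Hypothetical exam scores for two classes
class_a = [72, 85, 78, 90, 66, 81, 77, 88]
class_b = [65, 70, 74, 68, 80, 62, 71, 69]
t, df = independent_t(class_a, class_b)
print(f"t = {t:.2f} with {df} degrees of freedom")
```

With 14 degrees of freedom, the two-tailed critical value at the .05 level is 2.145, so a t-value beyond that in either direction would count as statistically significant for this made-up data.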

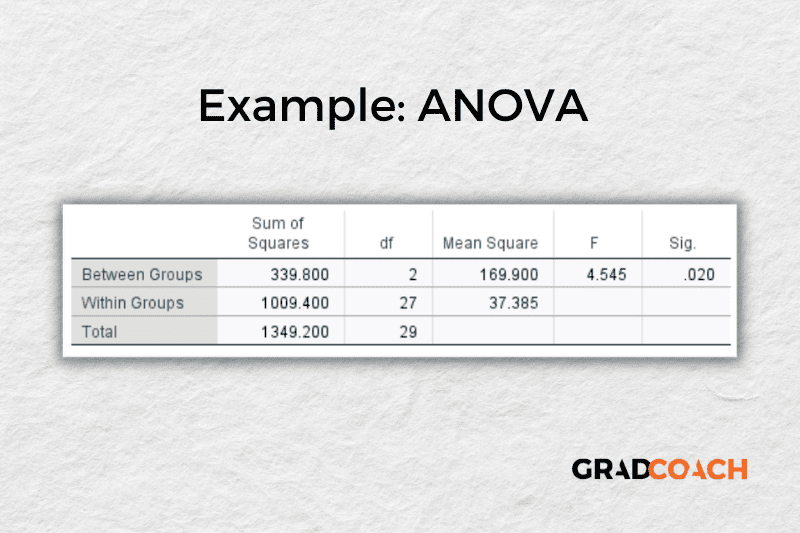

While a t-test compares the means of just two groups, an ANOVA (which stands for Analysis of Variance) can compare the means of more than two groups at once. Again, this helps you assess whether the differences in the means are statistically significant or simply a product of chance.

For example, if you want to know whether students’ test scores vary based on the type of school they attend – public, private, or homeschool – you could use ANOVA to compare the average standardised test scores of the three groups.

Similarly, you could use ANOVA to compare the average sales of a product across multiple stores. Based on this data, you could make an inference as to whether location is related to sales.

In these examples, we’re specifically referring to what’s called a one-way ANOVA, but as always, there are multiple types of ANOVAs for different applications. So, be sure to do your research before opting for any specific test.
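As a quick illustration of what a one-way ANOVA actually calculates, here’s a small Python sketch that computes the F-statistic (the ratio of between-group to within-group variance). The test scores are invented and the function name is hypothetical; it doesn’t compute a p-value, so you’d still compare F against a critical value or use a stats package.

```python
import statistics

def one_way_anova_f(*groups):
    """F statistic for a one-way ANOVA, plus between- and within-group df."""
    all_values = [value for group in groups for value in group]
    grand_mean = statistics.mean(all_values)
    # Variation of the group means around the grand mean
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean
    ss_within = sum((v - statistics.mean(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within

# Hypothetical standardised test scores by school type
public = [70, 75, 80]
private = [80, 85, 90]
homeschool = [75, 80, 85]
f, df_between, df_within = one_way_anova_f(public, private, homeschool)
print(f"F({df_between}, {df_within}) = {f:.2f}")
```

For 2 and 6 degrees of freedom, the critical F-value at the .05 level is roughly 5.14, so an F below that would not be treated as statistically significant.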

While t-tests and ANOVAs test for differences in the means across groups, the Chi-square test is used to see if there’s a difference in the proportions of various categories. In stats speak, the Chi-square test assesses whether there’s a statistically significant relationship between two categorical variables (i.e., nominal or ordinal data). If you’re not familiar with these terms, check out our explainer video.

As an example, you could use a Chi-square test to check if there’s a link between gender (e.g., male and female) and preference for a certain category of car (e.g., sedans or SUVs). Similarly, you could use this type of test to see if there’s a relationship between the type of breakfast people eat (cereal, toast, or nothing) and their university major (business, math or engineering).
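Here’s a minimal Python sketch of the Chi-square calculation for the gender and car preference example. The counts are made up, the function name is hypothetical, and it only handles a 2×2 table without any continuity correction (which is why the simple p-value shortcut for one degree of freedom works).

```python
import math

def chi_square_2x2(table):
    """Chi-square test of independence for a 2x2 table of counts (df = 1)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if the two variables were unrelated
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    p_value = math.erfc(math.sqrt(chi2 / 2))  # closed form for df = 1
    return chi2, p_value

# Hypothetical counts: rows = gender, columns = sedan / SUV
counts = [[30, 20],
          [20, 30]]
chi2, p = chi_square_2x2(counts)
print(f"chi-square = {chi2:.2f}, p = {p:.4f}")
```

The idea is simply to compare the counts you observed with the counts you’d expect if gender and car preference had nothing to do with each other.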

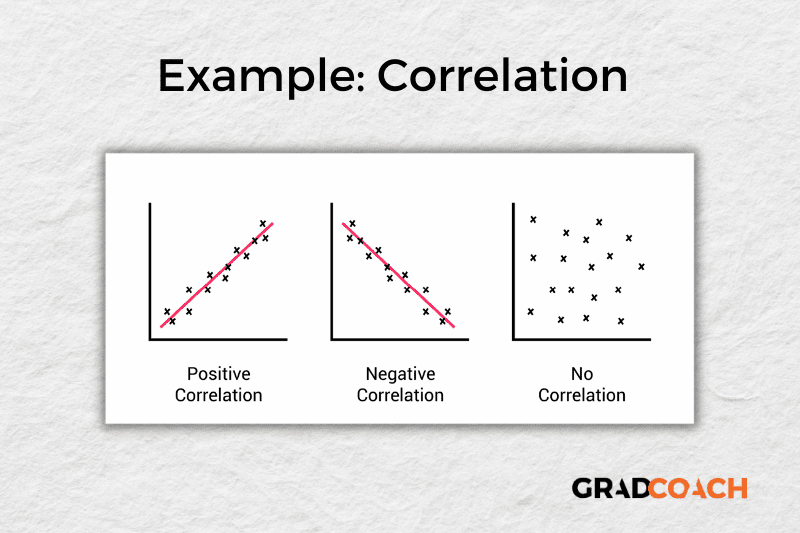

Correlation analysis looks at the relationship between two numerical variables (like height and weight) to assess whether they “move together” in some way. In stats-speak, correlation assesses whether a statistically significant relationship exists between two variables that are interval or ratio in nature.

For example, you might find a correlation between hours spent studying and exam scores. This would suggest that generally, the more hours people spend studying, the higher their scores are likely to be.

Similarly, a correlation analysis may reveal a negative relationship between time spent watching TV and physical fitness (represented by VO2 max levels), where the more time spent in front of the television, the lower the physical fitness level.

When running a correlation analysis, you’ll be presented with a correlation coefficient (also known as an r-value), which is a number between -1 and 1. A value close to 1 means that the two variables move in the same direction, while a number close to -1 means that they move in opposite directions. A correlation value of zero means there’s no linear relationship between the two variables.

What’s important to highlight here is that while correlation analysis can help you understand how two variables are related, it doesn’t prove that one causes the other. As the adage goes, correlation is not causation.
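To show where the r-value comes from, here’s a short Python sketch that calculates Pearson’s correlation coefficient by hand for the study hours example. The data points are invented and the function name is hypothetical.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # How much x and y vary together, and how much each varies on its own
    sum_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sum_xx = sum((x - mean_x) ** 2 for x in xs)
    sum_yy = sum((y - mean_y) ** 2 for y in ys)
    return sum_xy / math.sqrt(sum_xx * sum_yy)

# Hypothetical data: hours studied and exam score for five students
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 70]
r = pearson_r(hours, scores)
print(f"r = {r:.3f}")
```

In this made-up data set the scores rise steadily with hours studied, so r lands very close to 1. A high r-value still says nothing about why the two variables move together.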

While correlation allows you to see whether there’s a relationship between two numerical variables, regression takes it a step further by allowing you to make predictions about the value of one variable (called the dependent variable) based on the value of one or more other variables (called the independent variables).

For example, you could use regression analysis to predict house prices based on the number of bedrooms, location, and age of the house. The analysis would give you an equation that lets you plug in these factors to estimate a house’s price. Similarly, you could potentially use regression analysis to predict a person’s weight based on their height, age, and daily calorie intake.

It’s worth noting that in these examples, we’ve been talking about multiple regression, as there are multiple independent variables. While this is a popular form of regression, there are many others, including simple linear, logistic and multivariate. As always, be sure to do your research before selecting a specific statistical test.

As with correlation, keep in mind that regression analysis alone doesn’t prove causation. While it can show that variables are related and help you make predictions, it can’t prove that one variable causes another to change. Other factors that you haven’t included in your model could be influencing the results. To establish causation, you’d typically need a very specific research design that allows you to control all (or at least most) variables.
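To give you a feel for the “equation” that regression produces, here’s a small Python sketch. To keep things short it uses simple linear regression (one independent variable) rather than the multiple regression described above, and the house prices are invented. The function name is hypothetical.

```python
def fit_simple_regression(xs, ys):
    """Least-squares slope and intercept for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sum_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sum_xx = sum((x - mean_x) ** 2 for x in xs)
    slope = sum_xy / sum_xx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: number of bedrooms and price (in thousands)
bedrooms = [1, 2, 3, 4, 5]
prices = [150, 200, 240, 310, 350]
slope, intercept = fit_simple_regression(bedrooms, prices)

# Plug a new value into the fitted equation to get a prediction
predicted_price = intercept + slope * 6
print(f"price = {intercept:.0f} + {slope:.0f} * bedrooms")
print(f"predicted price for 6 bedrooms: {predicted_price:.0f}")
```

A real analysis would use more than one predictor and far more data, but the principle is the same: fit the equation on your sample, then plug in new values to predict.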

Let’s Recap

We’ve covered quite a bit of ground. Here’s a quick recap of the key takeaways:

- Inferential stats allow you to assess whether patterns in your sample are likely to be present in your population.

- Some common inferential statistical tests include t-tests, ANOVA, chi-square, correlation and regression.

- Inferential statistics alone do not prove causation. To identify and measure causal relationships, you need a very specific research design.


Research Methods in Psychology

14. Inferential Statistics

The great tragedy of science - the slaying of a beautiful hypothesis by an ugly fact. —Thomas Huxley

Truth in science can be defined as the working hypothesis best suited to open the way to the next better one. —Konrad Lorenz

Recall that Matthias Mehl and his colleagues, in their study of sex differences in talkativeness, found that the women in their sample spoke a mean of 16,215 words per day and the men a mean of 15,669 words per day [MVRamirezE+07] . But despite observing this difference in their sample, they concluded that there was no evidence of a sex difference in talkativeness in the population. Recall also that Allen Kanner and his colleagues, in their study of the relationship between daily hassles and symptoms, found a correlation of 0.6 in their sample [KCSL81] . But they concluded that this finding implied a relationship between hassles and symptoms in the population. These examples raise questions about how researchers can draw conclusions about the population based on results from their sample.

To answer such questions, researchers use a set of techniques called inferential statistics, which is what this chapter is about. We focus, in particular, on null hypothesis testing, the most common approach to inferential statistics in psychological research. We begin with a conceptual overview of null hypothesis testing, including its purpose and basic logic. Then we look at several null hypothesis testing techniques that allow conclusions about differences between means and about correlations between quantitative variables. Finally, we consider a few other important ideas related to null hypothesis testing, including some that can be helpful in planning new studies and interpreting results. We also look at some long-standing criticisms of null hypothesis testing and some ways of dealing with these criticisms.

14.1. Understanding Null Hypothesis Testing

14.1.1. Learning Objectives

Explain the purpose of null hypothesis testing, including the role of sampling error.

Describe the basic logic of null hypothesis testing.

Describe the role of relationship strength and sample size in determining statistical significance and make reasonable judgments about statistical significance based on these two factors.

14.1.2. The Purpose of Null Hypothesis Testing

As we have seen, psychological research typically involves measuring one or more variables within a sample and computing descriptive statistics. In general, however, the researcher’s goal is not to draw conclusions about the participants in that sample, but rather to draw conclusions about the population from which those participants were selected. Thus, researchers must use sample statistics to draw conclusions about the corresponding values in the population. These corresponding values in the population are called parameters. Imagine, for example, that a researcher measures the number of depressive symptoms exhibited by each of 50 clinically depressed adults and computes the mean number of symptoms. The researcher probably wants to use this sample statistic (the mean number of symptoms for the sample) to draw conclusions about the corresponding population parameter (the mean number of symptoms for clinically depressed adults).

Unfortunately, sample statistics are not perfect estimates of their corresponding population parameters. This is because there is a certain amount of random variability in any statistic from sample to sample. The mean number of depressive symptoms might be 8.73 in one sample of clinically depressed adults, 6.45 in a second sample, and 9.44 in a third. This will happen even though these samples are randomly selected from the same population. Similarly, the correlation (e.g., Pearson’s r) between two variables might be 0.24 in one sample, -0.04 in a second sample, and 0.15 in a third. Again, this can and will happen even though these samples are selected randomly from the same population. This random variability in statistics calculated from sample to sample is called sampling error. Note that the term error here refers to the statistical notion of error, or random variability, and does not imply that anyone has made a mistake. No one “commits a sampling error”.
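Sampling error can be seen directly in a small simulation. The following Python sketch is only an illustration: the population (normally distributed symptom counts with a mean of 8 and a standard deviation of 3) is an assumption made for the example and does not come from any study discussed here.

```python
import random
import statistics

random.seed(42)  # fixed seed so the sketch is repeatable

# Assumed population: 100,000 scores with mean 8 and standard deviation 3
population = [random.gauss(8, 3) for _ in range(100_000)]

# Draw three random samples of 50 from the same population
sample_means = [
    statistics.mean(random.sample(population, 50))
    for _ in range(3)
]

for number, mean in enumerate(sample_means, start=1):
    print(f"Sample {number}: mean = {mean:.2f}")
```

Each sample comes from the same population, yet the three means differ from one another and from the population mean. That variability is sampling error.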

One implication of this is that when there is a statistical relationship in a sample, it is not always clear whether there is a statistical relationship in the population. A small difference between two group means in a sample might indicate that there is a small difference between the two group means in the population. But it could also be that there is no difference between the means in the population and that the difference in the sample is just a matter of sampling error. Similarly, a Pearson’s r value of -0.29 in a sample might mean that there is a negative relationship in the population. But it could also be that there is no relationship in the population and that the relationship in the sample is just a matter of sampling error.

In fact, any relationship observed in a sample can be interpreted in two ways:

There is a relationship in the population, and the relationship in the sample reflects this.

There is no relationship in the population, and the relationship in the sample reflects only sampling error.

The purpose of inferential statistics is simply to help researchers decide between these two interpretations.

14.1.3. The Logic of Null Hypothesis Testing

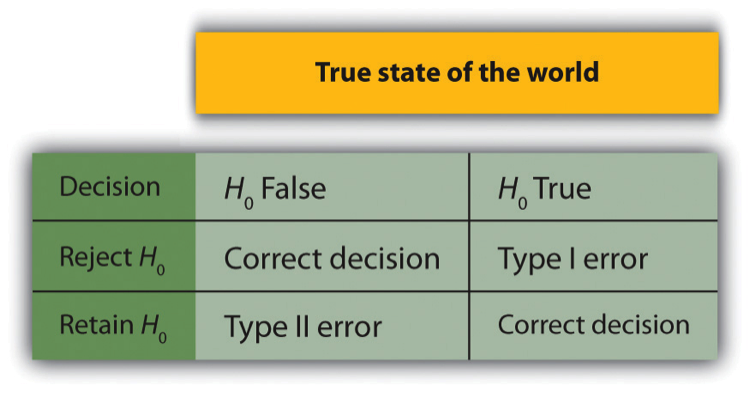

Null hypothesis testing (or NHST) is a formal approach to making decisions between these two interpretations. One interpretation is called the null hypothesis (often symbolized \(H_0\) and read as “H-naught”). This is the idea that there is no relationship in the population and that the relationship in the sample reflects only sampling error. Informally, the null hypothesis is that the sample relationship “occurred by chance”. The other interpretation is called the alternative hypothesis (often symbolized as \(H_1\) ). This is the idea that there is a relationship in the population and that the relationship in the sample reflects this relationship in the population.

Again, every statistical relationship in a sample can be interpreted in either of these two ways: It might have occurred by chance, or it might reflect a relationship in the population. So researchers need a way to decide between them. Although there are many specific null hypothesis testing techniques, they are all based on the same general logic. The steps are as follows:

Assume for the moment that the null hypothesis is true. There is no relationship between the variables in the population.

Determine how likely the sample relationship would be if the null hypothesis were true.

If the sample relationship would be extremely unlikely, then reject the null hypothesis in favor of the alternative hypothesis. If it would not be extremely unlikely, then retain the null hypothesis.
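The three steps above can be sketched in code. The example below uses a permutation test, which is not one of the techniques covered in this chapter but follows the same logic: it assumes the null hypothesis is true by shuffling group labels, then counts how often a difference at least as large as the observed one arises. The data, the function name, and the number of shuffles are all hypothetical.

```python
import random
import statistics

def permutation_p_value(group_a, group_b, n_shuffles=10_000, seed=0):
    """Proportion of label shuffles giving a mean difference at least as large as observed."""
    rng = random.Random(seed)
    observed = abs(statistics.mean(group_a) - statistics.mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_shuffles):
        # Step 1: act as if the null hypothesis were true (group labels are arbitrary)
        rng.shuffle(pooled)
        diff = abs(statistics.mean(pooled[:n_a]) - statistics.mean(pooled[n_a:]))
        # Step 2: record how often chance alone produces a result this extreme
        if diff >= observed - 1e-12:
            at_least_as_extreme += 1
    return at_least_as_extreme / n_shuffles

# Hypothetical scores for two groups
group_a = [12, 15, 14, 16, 13, 17, 15, 14]
group_b = [10, 11, 9, 12, 10, 11, 13, 10]

p_value = permutation_p_value(group_a, group_b)
# Step 3: reject the null hypothesis only if the result would be very unlikely
decision = "reject" if p_value < 0.05 else "retain"
print(f"p = {p_value:.4f}, so we {decision} the null hypothesis")
```

The .05 cutoff used in the last step is a conventional choice for what counts as "extremely unlikely".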

Following this logic, we can begin to understand why Mehl and his colleagues concluded that there is no difference in talkativeness between women and men in the population. In essence, they asked the following question: “If there were no difference in the population, how likely is it that we would find a small difference of d = 0.06 in our sample?” Their answer to this question was that this sample relationship would be fairly likely if the null hypothesis were true. Therefore, they retained the null hypothesis—concluding that there is no evidence of a sex difference in the population. We can also see why Kanner and his colleagues concluded that there is a correlation between hassles and symptoms in the population. They asked, “If the null hypothesis were true, how likely is it that we would find a strong correlation of +.60 in our sample?” Their answer to this question was that this sample relationship would be fairly unlikely if the null hypothesis were true. Therefore, they rejected the null hypothesis in favor of the alternative hypothesis—concluding that there is a positive correlation between these variables in the population.

A crucial step in null hypothesis testing is finding the probability of the sample result if the null hypothesis were true. This probability is called the p value. A small p value means that the sample result would be unlikely if the null hypothesis were true and leads to the rejection of the null hypothesis. A large p value means that the sample result would be likely if the null hypothesis were true and leads to the null hypothesis being retained. But how low must the p value be before the sample result is considered unlikely enough to reject the null hypothesis? In null hypothesis testing, this criterion is called \(\alpha\) (alpha) and is often set to .05. If the chance of a result as extreme as the sample result (or more extreme) is less than 5% if the null hypothesis were true, then the null hypothesis is rejected. When this happens, the result is said to be statistically significant. If the chance of a result as extreme as the sample result is greater than 5% when the null hypothesis is true, then the null hypothesis is retained. This does not necessarily mean that the researcher accepts the null hypothesis as true. It means that there is not currently enough evidence to conclude that it is false. For this reason, researchers often use the expression “fail to reject the null hypothesis” rather than something such as “conclude the null hypothesis is true”.

14.1.4. The Misunderstood p Value

The p value is one of the most misunderstood quantities in psychological research [Coh94] . Even professional researchers misinterpret it, and it is not unusual for such misinterpretations to appear in statistics textbooks!

The most common misinterpretation is that the p value is the probability that the null hypothesis is true or that the p value is the probability that the sample result occurred by chance. For example, a misguided researcher might say that because the p value is .02, there is only a 2% chance that the result is due to chance and a 98% chance that it reflects a real relationship in the population. But this is incorrect. The p value is really the probability of a result at least as extreme as the sample result if the null hypothesis were true. So a p value of .02 means that if the null hypothesis were true, a sample result this extreme would occur only 2% of the time.

You can avoid this misunderstanding by remembering that the p value is not the probability that any particular hypothesis is true or false. Instead, it is the probability of obtaining a result at least as extreme as the sample result if the null hypothesis were true.

14.1.5. Role of Sample Size and Relationship Strength

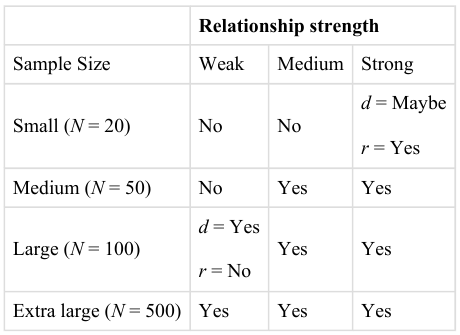

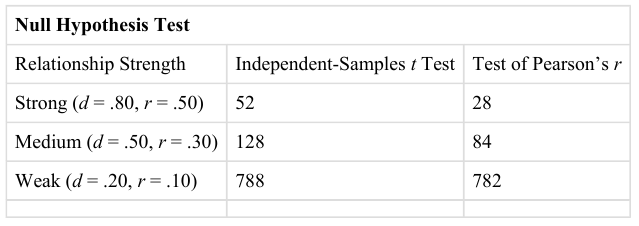

Recall that null hypothesis testing involves answering the question, “If the null hypothesis were true, what is the probability of a sample result as extreme as this one?” As we have just seen, this question is equivalent to, “What is the p value?” It can be helpful to see that the answer to this question depends on just two considerations: the strength of the relationship and the size of the sample. Specifically, the stronger the sample relationship and the larger the sample, the less likely the result would be if the null hypothesis were true. That is, the lower the p value. This should make sense. Imagine a study in which a sample of 500 women is compared with a sample of 500 men in terms of some psychological characteristic, and Cohen’s d is a strong 0.50. If there were really no sex difference in the population, then a result this strong based on such a large sample should seem highly unlikely. Now imagine a similar study in which a sample of three women is compared with a sample of three men, and Cohen’s d is a weak 0.10. If there were no sex difference in the population, then a relationship this weak based on such a small sample should seem likely. And this is precisely why the null hypothesis would be rejected in the first example and retained in the second.
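The way relationship strength and sample size combine can be illustrated with a short calculation. The sketch below assumes two independent groups of equal size and a Cohen's d computed with the pooled standard deviation, in which case the t statistic equals d multiplied by the square root of half the group size. The function name is hypothetical.

```python
import math

def t_from_cohens_d(d, n_per_group):
    """t statistic for two equal-sized independent groups, given Cohen's d."""
    return d * math.sqrt(n_per_group / 2)

# Strong result, large sample: d = 0.50 with 500 participants per group
t_large = t_from_cohens_d(0.50, 500)

# Weak result, small sample: d = 0.10 with 3 participants per group
t_small = t_from_cohens_d(0.10, 3)

print(f"Large study: t = {t_large:.2f}")
print(f"Small study: t = {t_small:.2f}")
```

The first t score is far beyond the two-tailed critical value of roughly 1.96 that applies to very large samples, while the second falls well short of the critical value of 2.776 for 4 degrees of freedom. This matches the decisions described above: reject in the first case and retain in the second.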

Of course, sometimes the result can be weak and the sample large, or the result can be strong and the sample small. In these cases, the two considerations trade off against each other so that a weak result can be statistically significant if the sample is large enough and a strong relationship can be statistically significant even if the sample is small. Figure 14.1 shows a rough guideline of how relationship strength and sample size might combine to determine whether a sample result is statistically significant or not. The columns of the table represent the three levels of relationship strength: weak, medium, and strong. The rows represent four sample sizes that can be considered small, medium, large, and extra large in the context of psychological research. Thus, each cell in the table represents a combination of relationship strength and sample size. If a cell contains the word Yes, then this combination would be statistically significant for both Cohen’s d and Pearson’s r. If it contains the word No, then it would not be statistically significant for either. There is one cell where the decision for d and r would be different and another where it might be different depending on some additional considerations, which are discussed below, in the section entitled “Some Basic Null Hypothesis Tests”.

Fig. 14.1 How Relationship Strength and Sample Size Combine to Determine Whether a Result Is Statistically Significant

Although Figure 14.1 provides only a rough guideline, it shows very clearly that weak relationships based on medium or small samples are less likely to be statistically significant and that strong relationships based on medium or larger samples are more likely to be statistically significant. If you keep this lesson in mind, you will often know whether a result is statistically significant based on the descriptive statistics alone. It is extremely useful to be able to develop this kind of intuitive judgment. One reason is that it allows you to develop expectations about how your formal null hypothesis tests are going to come out, which in turn allows you to detect problems in your analyses. For example, if your sample relationship is strong and your sample is medium, then you would expect to reject the null hypothesis. If for some reason your formal null hypothesis test indicates otherwise, then you may need to double-check your computations and interpretations. A second reason is that the ability to make this kind of intuitive judgment is an indication that you understand the basic logic of this approach.

14.1.6. Statistical Significance Versus Practical Significance

Figure 14.1 illustrates another extremely important point. A statistically significant result is not necessarily a strong one. Even a very weak result can be statistically significant if it is based on a large enough sample. This is closely related to Janet Shibley Hyde’s argument about sex differences [Hyd07]. The differences between women and men in mathematical problem solving and leadership ability are statistically significant. But the word “significant” can cause people to interpret these differences as strong and important, perhaps even important enough to influence the college courses they take or even who they vote for. As we have seen, however, these statistically significant differences are actually quite weak, perhaps even “trivial”.

This is why it is important to distinguish between the statistical significance of a result and the practical significance of that result. Practical significance refers to the importance or usefulness of the result in some real-world context. Many sex differences are statistically significant (and may even be interesting for purely scientific reasons) but they are often not practically significant. In clinical practice, this same concept is often referred to as “clinical significance”. For example, a study on a new treatment for social phobia might show that it produces a positive effect that is statistically significant. Yet this effect still might not be strong enough to justify the time, effort, and other costs of putting it into practice. For example, easier and cheaper treatments that work almost as well might already exist. Although statistically significant, this result would be said to lack practical or clinical significance.

14.1.7. Key Takeaways

Null hypothesis testing is a formal approach to deciding whether a statistical relationship in a sample reflects a real relationship in the population or is just due to chance.

The logic of null hypothesis testing involves assuming that the null hypothesis is true, finding how likely the sample result would be if this assumption were correct, and then making a decision. If the sample result would be unlikely if the null hypothesis were true, then it is rejected in favor of the alternative hypothesis. If it would not be unlikely, then the null hypothesis is retained.

The probability of obtaining the sample result if the null hypothesis were true (the p value) is based on two considerations: relationship strength and sample size. Reasonable judgments about whether a sample relationship is statistically significant can often be made by quickly considering these two factors.

Statistical significance is not the same as relationship strength or importance. Even weak relationships can be statistically significant if the sample size is large enough. It is important to consider relationship strength and the practical significance of a result in addition to its statistical significance.

14.1.8. Exercises

Discussion: Imagine a study showing that people who eat more broccoli tend to be happier. Explain for someone who knows nothing about statistics why the researchers would conduct a null hypothesis test.

Practice: Use Figure 14.1 to try to determine whether each of the following results is statistically significant:

a. The correlation between two variables is r = -0.78 based on a sample size of 137.

b. The mean score on a psychological characteristic for women is 25 (SD = 5) and the mean score for men is 24 (SD = 5). There were 12 women and 10 men in this study.

c. In a memory experiment, the mean number of items recalled by the 40 participants in Condition A was 0.50 standard deviations greater than the mean number recalled by the 40 participants in Condition B.

d. In another memory experiment, the mean scores for participants in Condition A and Condition B came out exactly the same!

e. A student finds a correlation of r = 0.04 between the number of units the students in his research methods class are taking and the students’ level of stress.

14.2. Some Basic Null Hypothesis Tests

14.2.1. Learning Objectives

Conduct and interpret one-sample, dependent-samples, and independent-samples t tests.

Interpret the results of one-way, repeated measures, and factorial ANOVAs.

Conduct and interpret null hypothesis tests of Pearson’s r.

In this section, we look at several common null hypothesis testing procedures. The emphasis here is on providing enough information to allow you to conduct and interpret the most basic versions. In most cases, the online statistical analysis tools mentioned in Chapter 13 will handle the computations, as will programs such as Microsoft Excel and SPSS.

14.2.2. The t Test

As we have seen throughout this book, many studies in psychology focus on the difference between two means. The most common null hypothesis test for this type of statistical relationship is the t test. In this section, we look at three types of t tests that are used for slightly different research designs: the one-sample t test, the dependent-samples t test, and the independent-samples t test.

14.2.3. One-Sample t Test

The one-sample t test is used to compare a sample mean (M) with a hypothetical population mean ( \(\mu_0\) ) that provides some interesting standard of comparison. The null hypothesis is that the mean for the population ( \(\mu\) ) is equal to the hypothetical population mean: \(\mu = \mu_0\) . The alternative hypothesis is that the mean for the population is different from the hypothetical population mean: \(\mu \neq \mu_0\) . To decide between these two hypotheses, we need to find the probability of obtaining the sample mean (or one more extreme) if the null hypothesis were true. But finding this p value requires first computing a test statistic called t. A test statistic is a statistic that is computed only to help find the p value. The formula for t is as follows:

\[t = \frac{M - \mu_0}{SD / \sqrt{N}}\]

Again, M is the sample mean and \(\mu_0\) is the hypothetical population mean of interest. SD is the sample standard deviation and N is the sample size.
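Using the quantities just defined, t is the difference between M and \(\mu_0\) divided by SD over the square root of N. The following Python sketch carries out that calculation; the scores and the hypothetical population mean of 8 are invented for illustration, and the function name is an assumption.

```python
import math
import statistics

def one_sample_t(scores, mu_0):
    """One-sample t statistic and degrees of freedom."""
    n = len(scores)
    sample_mean = statistics.mean(scores)
    sample_sd = statistics.stdev(scores)  # sample standard deviation (N - 1 denominator)
    t = (sample_mean - mu_0) / (sample_sd / math.sqrt(n))
    return t, n - 1

# Hypothetical scores from 10 participants, compared with a standard of 8
scores = [8, 10, 7, 9, 11, 6, 9, 12, 8, 10]
t, df = one_sample_t(scores, mu_0=8)
print(f"t = {t:.3f} with {df} degrees of freedom")
```

For these invented data, the sample mean of 9 is one point above the comparison value, and the resulting t score is smaller than the two-tailed critical value of 2.262 for 9 degrees of freedom, so the null hypothesis would be retained.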

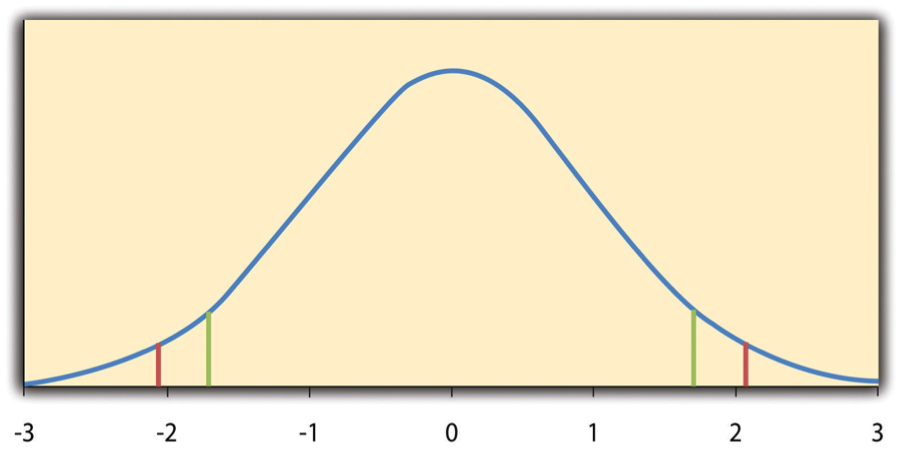

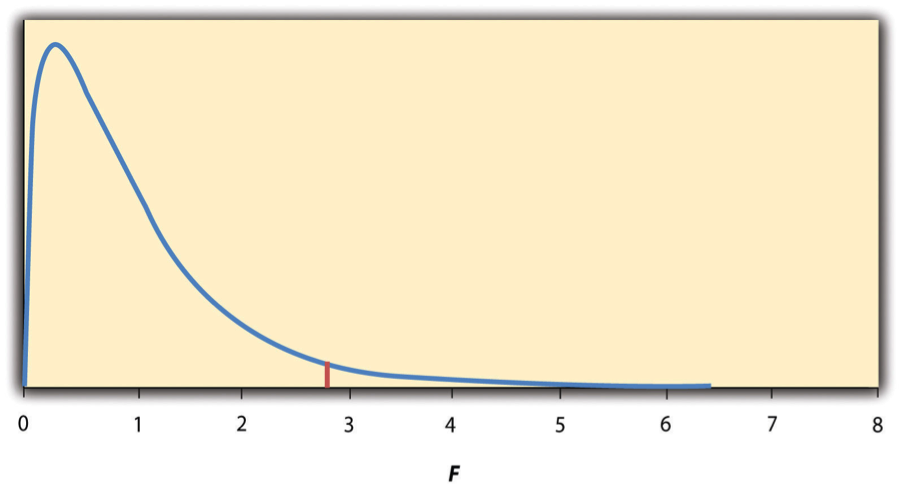

The reason the t statistic (or any test statistic) is useful is that we know how it is distributed when the null hypothesis is true. As shown in Figure 14.2, this distribution is unimodal and symmetrical, and it has a mean of 0. Its precise shape depends on a statistical concept called the degrees of freedom, which for a one-sample t test is N - 1 (there are 24 degrees of freedom for the distribution shown in Figure 14.2). The important point is that knowing this distribution makes it possible to find the p value for any t score. Consider, for example, a t score of +1.50 based on a sample of 25. The probability of a t score at least this extreme is given by the proportion of t scores in the distribution that are at least this extreme. For now, let us define extreme as being far from zero in either direction. Thus the p value is the proportion of t scores that are +1.50 or above or that are -1.50 or below, a value that turns out to be .14.
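For readers curious about where such a p value comes from, the sketch below finds the two-tailed proportion numerically. It is one possible approach, not the method used by any particular statistics program: it integrates the standard density function of the t distribution between -|t| and +|t| using Simpson's rule and subtracts that central area from 1. The function names and the number of integration steps are assumptions.

```python
import math

def t_density(x, df):
    """Probability density of the t distribution with df degrees of freedom."""
    coefficient = math.gamma((df + 1) / 2) / (
        math.sqrt(df * math.pi) * math.gamma(df / 2)
    )
    return coefficient * (1 + x * x / df) ** (-(df + 1) / 2)

def two_tailed_p(t, df, steps=2000):
    """Two-tailed p value: area beyond -|t| and +|t| (steps must be even)."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    lower = -upper
    width = (upper - lower) / steps
    # Simpson's rule over the central region of the distribution
    total = t_density(lower, df) + t_density(upper, df)
    for i in range(1, steps):
        weight = 4 if i % 2 else 2
        total += weight * t_density(lower + i * width, df)
    central_area = total * width / 3
    return 1 - central_area

p = two_tailed_p(1.50, df=24)
print(f"Two-tailed p for t = 1.50 with 24 df: {p:.3f}")
```

The result should land in the neighborhood of the value quoted above. Small differences in the second decimal place can arise from rounding.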

Fig. 14.2 Distribution of t Scores (with 24 Degrees of Freedom) when the null hypothesis is true. The red vertical lines represent the two-tailed critical values, and the green vertical lines the one-tailed critical values when \(\alpha\) = .05.

Fortunately, we do not have to deal directly with the distribution of t scores. If we were to enter our sample data and hypothetical mean of interest into one of the online statistical tools in Chapter 13 or into a program like SPSS, the output would include both the t score and the p value. At this point, the rest of the procedure is simple. If p is less than .05, we reject the null hypothesis and conclude that the population mean differs from the hypothetical mean of interest. If p is greater than .05, we conclude that there is not enough evidence to say that the population mean differs from the hypothetical mean of interest.

If we were to compute the t score by hand, we could use the table below to make the decision. This table does not provide actual p values. Instead, it provides the critical values of t for different degrees of freedom (df) when \(\alpha\) is .05. For now, let us focus on the two-tailed critical values in the last column of the table. Each of these values should be interpreted as a pair of values: one positive and one negative. For example, the two-tailed critical values when there are 24 degrees of freedom are +2.064 and -2.064. These are represented by the red vertical lines in Figure 14.2. The idea is that any t score below the lower critical value (the left-hand red line in Figure 14.2) is in the lowest 2.5% of the distribution, while any t score above the upper critical value (the right-hand red line) is in the highest 2.5% of the distribution. Therefore any t score beyond the critical value in either direction is in the most extreme 5% of t scores when the null hypothesis is true and has a p value less than .05. Thus if the t score we compute is beyond the critical value in either direction, then we reject the null hypothesis. If the t score we compute is between the upper and lower critical values, then we retain the null hypothesis.

| df | One-tailed critical value | Two-tailed critical value |
|---|---|---|
| 3 | 2.353 | 3.182 |
| 4 | 2.132 | 2.776 |
| 5 | 2.015 | 2.571 |
| 6 | 1.943 | 2.447 |
| 7 | 1.895 | 2.365 |
| 8 | 1.860 | 2.306 |
| 9 | 1.833 | 2.262 |
| 10 | 1.812 | 2.228 |
| 11 | 1.796 | 2.201 |
| 12 | 1.782 | 2.179 |
| 13 | 1.771 | 2.160 |
| 14 | 1.761 | 2.145 |
| 15 | 1.753 | 2.131 |
| 16 | 1.746 | 2.120 |
| 17 | 1.740 | 2.110 |
| 18 | 1.734 | 2.101 |
| 19 | 1.729 | 2.093 |
| 20 | 1.725 | 2.086 |
| 21 | 1.721 | 2.080 |
| 22 | 1.717 | 2.074 |
| 23 | 1.714 | 2.069 |
| 24 | 1.711 | 2.064 |
| 25 | 1.708 | 2.060 |
| 30 | 1.697 | 2.042 |
| 35 | 1.690 | 2.030 |
| 40 | 1.684 | 2.021 |
| 45 | 1.679 | 2.014 |
| 50 | 1.676 | 2.009 |
| 60 | 1.671 | 2.000 |
| 70 | 1.667 | 1.994 |
| 80 | 1.664 | 1.990 |
| 90 | 1.662 | 1.987 |
| 100 | 1.660 | 1.984 |

Thus far, we have considered what is called a two-tailed test, where we reject the null hypothesis if the t score for the sample is extreme in either direction. This test makes sense when we believe that the sample mean might differ from the hypothetical population mean but we do not have good reason to expect the difference to go in a particular direction. But it is also possible to do a one-tailed test, where we reject the null hypothesis only if the t score for the sample is extreme in one direction that we specify before collecting the data. This test makes sense when we have good reason to expect the sample mean will differ from the hypothetical population mean in a particular direction.

Here is how it works. Each one-tailed critical value in the table above can again be interpreted as a pair of values: one positive and one negative. A t score below the lower critical value is in the lowest 5% of the distribution, and a t score above the upper critical value is in the highest 5% of the distribution. For 24 degrees of freedom, these values are -1.711 and +1.711 (represented by the green vertical lines in Figure 14.2). However, for a one-tailed test, we must decide before collecting data whether we expect the sample mean to be lower than the hypothetical population mean, in which case we would use only the lower critical value, or greater than the hypothetical population mean, in which case we would use only the upper critical value. Notice that we still reject the null hypothesis when the t score for our sample is in the most extreme 5% of the t scores we would expect if the null hypothesis were true, keeping \(\alpha\) at .05. We have simply redefined “extreme” to refer only to one tail of the distribution. The advantage of the one-tailed test is that the critical value in that tail is less extreme. If the sample mean differs from the hypothetical population mean in the expected direction, then we have a better chance of rejecting the null hypothesis. The disadvantage is that if the sample mean differs from the hypothetical population mean in the unexpected direction, then there is no chance of rejecting the null hypothesis.
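The two decision rules can be sketched in a few lines of Python. This is only an illustration: the function name `reject_null`, its parameters, and the small `CRITICAL_T` dictionary are made up for this sketch, the dictionary holds just three rows copied from the table above, and the t score of 1.90 is an arbitrary example value.

```python
# Critical values of t at alpha = .05, copied from three rows of the table:
# df -> (one-tailed critical value, two-tailed critical value)
CRITICAL_T = {
    9: (1.833, 2.262),
    13: (1.771, 2.160),
    24: (1.711, 2.064),
}


def reject_null(t, df, tails=2, direction=None):
    """Return True if t falls in the rejection region at alpha = .05.

    tails: 2 for a two-tailed test, 1 for a one-tailed test.
    direction: "lower" or "upper" for a one-tailed test, chosen before
    collecting the data; ignored for a two-tailed test.
    """
    one_tailed, two_tailed = CRITICAL_T[df]
    if tails == 2:
        # Extreme in either direction counts.
        return abs(t) > two_tailed
    if direction == "lower":
        return t < -one_tailed
    if direction == "upper":
        return t > one_tailed
    raise ValueError("a one-tailed test needs direction='lower' or 'upper'")


# An illustrative t score of 1.90 with 24 degrees of freedom:
print(reject_null(1.90, 24))                              # False
print(reject_null(1.90, 24, tails=1, direction="upper"))  # True
print(reject_null(1.90, 24, tails=1, direction="lower"))  # False
```

The same t score is retained under the two-tailed rule, rejected under a one-tailed rule in the expected direction, and can never be rejected under a one-tailed rule in the opposite direction.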

14.2.4. Example One-Sample t Test

Imagine that a health psychologist is interested in the accuracy of university students’ estimates of the number of calories in a chocolate chip cookie. He shows the cookie to a sample of 10 students and asks each one to estimate the number of calories in it. Because the actual number of calories in the cookie is 250, this is the hypothetical population mean of interest ( \(\mu_0\) ). The null hypothesis is that the mean estimate for the population ( \(\mu\) ) is 250. Because he has no real sense of whether the students will underestimate or overestimate the number of calories, he decides to do a two-tailed test. Now imagine further that the participants’ actual estimates are as follows:

250, 280, 200, 150, 175, 200, 200, 220, 180, 250

The mean estimate for the sample (M) is 212.00 calories and the standard deviation (SD) is 39.17. The health psychologist can now compute the t score for his sample:

\[ t = \frac{M - \mu_0}{SD / \sqrt{N}} = \frac{212.00 - 250}{39.17 / \sqrt{10}} = -3.07 \]

If he enters the data into one of the online analysis tools or uses SPSS, it would also tell him that the two-tailed p value for this t score (with 10 - 1 = 9 degrees of freedom) is .013. Because this is less than .05, the health psychologist would reject the null hypothesis and conclude that university students tend to underestimate the number of calories in a chocolate chip cookie. If he computes the t score by hand, he could look at Table 13.2 and see that the critical value of t for a two-tailed test with 9 degrees of freedom is ±2.262. The fact that his t score was more extreme than this critical value would tell him that his p value is less than .05 and that he should reject the null hypothesis.

Finally, if this researcher had gone into this study with good reason to expect that university students underestimate the number of calories, then he could have done a one-tailed test instead of a two-tailed test. The only thing this decision would change is the critical value, which would be -1.833. This slightly less extreme value would make it a bit easier to reject the null hypothesis. However, if it turned out that university students overestimate the number of calories, the researcher would not have been able to reject the null hypothesis, no matter how much they overestimated it.
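As a rough check on the arithmetic, the one-sample calculation can be sketched in Python. The function `one_sample_t` and its parameter names are illustrative, not part of any library, and the inputs are the summary statistics reported in the text (M = 212.00, SD = 39.17, N = 10) rather than values recomputed from the raw estimates.

```python
import math


def one_sample_t(sample_mean, hypothetical_mean, sample_sd, n):
    """t = (M - mu_0) / (SD / sqrt(N))"""
    standard_error = sample_sd / math.sqrt(n)
    return (sample_mean - hypothetical_mean) / standard_error


# Summary statistics as reported in the text.
t = one_sample_t(sample_mean=212.00, hypothetical_mean=250, sample_sd=39.17, n=10)
df = 10 - 1

print(round(t, 2), df)   # -3.07 9

# Critical values for 9 degrees of freedom, taken from the table.
print(abs(t) > 2.262)    # two-tailed test: True, reject the null hypothesis
print(t < -1.833)        # one-tailed test, lower tail: True
```

Both comparisons lead to the same decision here because the t score is well beyond either critical value.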

14.2.5. The Dependent-Samples t Test

The dependent-samples t test (sometimes called the paired-samples t test) is used to compare two means for the same sample (e.g., a group of participants measured at two different times or under two different conditions). This comparison is appropriate for pretest-posttest designs or within-subjects experiments. The null hypothesis is that the two means are the same in the population. The alternative hypothesis is that they are not the same. Like the one-sample t test, the dependent-samples t test can be one-tailed if the researcher has good reason to expect the difference goes in a particular direction.

It helps to think of the dependent-samples t test as a special case of the one-sample t test. However, the first step in the dependent-samples t test is to reduce the two scores for each participant to a single measurement by taking the difference between them. At this point, the dependent-samples t test becomes a one-sample t test on the difference scores. The hypothetical population mean ( \(\mu_0\) ) of interest is 0 because this is what the mean difference score would be if there were no difference between the two means. We can now think of the null hypothesis as being that the mean difference score in the population is 0 ( \(\mu = 0\) ) and the alternative hypothesis as being that the mean difference score in the population is not 0 ( \(\mu \neq 0\) ).

14.2.6. Example Dependent-Samples t Test

Imagine that the health psychologist now knows that people tend to underestimate the number of calories in junk food and has developed a short training program to improve their estimates. To test the effectiveness of this program, he conducts a pretest-posttest study in which 10 participants estimate the number of calories in a chocolate chip cookie before the training program and then estimate the calories again afterward. Because he expects the program to increase participants’ estimates, he decides to conduct a one-tailed test. Now imagine further that the pretest estimates are:

230, 250, 280, 175, 150, 200, 180, 210, 220, 190

and that the posttest estimates (for the same participants in the same order) are:

250, 260, 250, 200, 160, 200, 200, 180, 230, 240.

The difference scores, then, are as follows:

+20, +10, -30, +25, +10, 0, +20, -30, +10, +50.

Note that it does not matter whether the first set of scores is subtracted from the second or the second from the first as long as it is done the same way for all participants. In this example, it makes sense to subtract the pretest estimates from the posttest estimates so that positive difference scores mean that the estimates went up after the training and negative difference scores mean the estimates went down.

The mean of the difference scores is 8.50 with a standard deviation of 24.27. The health psychologist can now compute the t score for his sample as follows:

\[ t = \frac{M - \mu_0}{SD / \sqrt{N}} = \frac{8.50 - 0}{24.27 / \sqrt{10}} = 1.11 \]

If he enters the data into one of the online analysis tools or uses Excel or SPSS, it would tell him that the one-tailed p value for this t score (again with 10 - 1 = 9 degrees of freedom) is .148. Because this is greater than .05, he would fail to reject the null hypothesis; he does not have enough evidence to suggest that the training program increases calorie estimates. If he were to compute the t score by hand, he could look at the table above and see that the critical value of t for a one-tailed test with 9 degrees of freedom is +1.833 (positive because he was expecting a positive mean difference score). The fact that his t score was less extreme than this critical value would tell him that his p value is greater than .05 and that he should retain the null hypothesis.
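The steps of this example can be sketched in Python using only the standard library. The variable names are illustrative; the data are the pretest and posttest estimates listed above, and `statistics.stdev` is used because it computes the sample standard deviation (dividing by N - 1).

```python
import math
import statistics

pretest = [230, 250, 280, 175, 150, 200, 180, 210, 220, 190]
posttest = [250, 260, 250, 200, 160, 200, 200, 180, 230, 240]

# Posttest minus pretest, so positive scores mean the estimate went up.
differences = [post - pre for pre, post in zip(pretest, posttest)]

mean_diff = statistics.mean(differences)
sd_diff = statistics.stdev(differences)  # sample SD (divides by N - 1)
n = len(differences)

# One-sample t test on the difference scores, with mu_0 = 0.
t = (mean_diff - 0) / (sd_diff / math.sqrt(n))
df = n - 1

print(differences)
print(mean_diff, round(t, 2), df)  # 8.5 1.11 9

# One-tailed critical value for 9 degrees of freedom, upper tail.
print(t > 1.833)                   # False: retain the null hypothesis
```

Reducing each pair to a single difference score first is what turns the two-condition comparison into an ordinary one-sample test against 0.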

14.2.7. The Independent-Samples t Test

The independent-samples t test is used to compare the means of two separate samples (M1 and M2). The two samples might have been tested under different conditions in a between-subjects experiment, or they could be preexisting groups in a correlational design (e.g., women and men or extraverts and introverts). The null hypothesis is that the two means are the same in the population: \(\mu_1 = \mu_2\). The alternative hypothesis is that they are not the same: \(\mu_1 \neq \mu_2\). Again, the test can be one-tailed if the researcher has good reason to expect the difference goes in a particular direction.

The t statistic here is a bit more complicated because it must take into account two sample means, two standard deviations, and two sample sizes. The formula is as follows:

\[ t = \frac{M_1 - M_2}{\sqrt{\dfrac{SD_1^2}{n_1} + \dfrac{SD_2^2}{n_2}}} \]

Notice that this formula includes squared standard deviations (the variances) that appear inside the square root symbol. Also, lowercase n1 and n2 refer to the sample sizes in the two groups or conditions (as opposed to capital N, which generally refers to the total sample size, here n1 + n2). The only additional thing to know here is that there are N - 2 degrees of freedom for the independent-samples t test.

14.2.8. Example Independent-Samples t Test

Now the health psychologist wants to compare the calorie estimates of people who regularly eat junk food with the estimates of people who rarely eat junk food. He believes the difference could come out in either direction so he decides to conduct a two-tailed test. He collects data from a sample of eight participants who eat junk food regularly and seven participants who rarely eat junk food. The data are as follows:

Junk food eaters: 180, 220, 150, 85, 200, 170, 150, 190

Non–junk food eaters: 200, 240, 190, 175, 200, 300, 240

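The mean for the junk food eaters is 168.12 with a standard deviation of 41.23. The mean for the non–junk food eaters is 220.71 with a standard deviation of 42.66. Taking the junk food eaters as sample 1 and the non–junk food eaters as sample 2, he can now compute his t score as follows:

\[ t = \frac{M_1 - M_2}{\sqrt{\dfrac{SD_1^2}{n_1} + \dfrac{SD_2^2}{n_2}}} = \frac{168.12 - 220.71}{\sqrt{\dfrac{41.23^2}{8} + \dfrac{42.66^2}{7}}} = -2.42 \]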

If he enters the data into one of the online analysis tools or uses Excel or SPSS, it would tell him that the two-tailed p value for this t score (with 15 - 2 = 13 degrees of freedom) is .031. Because this p value is less than .05, the health psychologist would reject the null hypothesis and conclude that people who eat junk food regularly make lower calorie estimates than people who eat it rarely. If he were to compute the t score by hand, he could look at the table above and see that the critical value of t for a two-tailed test with 13 degrees of freedom is ±2.160. The fact that the observed t score was more extreme than this critical value would tell him that his p value is less than .05 and that he should reject the null hypothesis.
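The same comparison can be sketched in Python from the raw estimates. The function `independent_samples_t` is an illustrative helper, not a library call; it assumes the formula described in the previous section, with the two variances divided by their sample sizes inside the square root and N - 2 degrees of freedom, and it treats the junk food eaters as sample 1.

```python
import math
import statistics

junk_food = [180, 220, 150, 85, 200, 170, 150, 190]
non_junk_food = [200, 240, 190, 175, 200, 300, 240]


def independent_samples_t(sample_1, sample_2):
    """Return (t, df) with t = (M1 - M2) / sqrt(SD1^2/n1 + SD2^2/n2)."""
    m1, m2 = statistics.mean(sample_1), statistics.mean(sample_2)
    # statistics.variance is the squared sample SD (divides by n - 1).
    var1, var2 = statistics.variance(sample_1), statistics.variance(sample_2)
    n1, n2 = len(sample_1), len(sample_2)
    t = (m1 - m2) / math.sqrt(var1 / n1 + var2 / n2)
    df = n1 + n2 - 2  # N - 2 degrees of freedom
    return t, df


t, df = independent_samples_t(junk_food, non_junk_food)
print(round(statistics.mean(junk_food), 2))      # 168.12
print(round(statistics.mean(non_junk_food), 2))  # 220.71
print(round(t, 2), df)                           # -2.42 13

# Two-tailed critical value for 13 degrees of freedom.
print(abs(t) > 2.160)                            # True: reject the null
```

The sign of t depends only on which group is entered first; the two-tailed decision uses its absolute value.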

14.2.9. The Analysis of Variance

When there are more than two group or condition means to be compared, the most common null hypothesis test is the analysis of variance (ANOVA). In this section, we look primarily at the one-way ANOVA, which is used for between-subjects designs with a single independent variable. We then briefly consider some other versions of the ANOVA that are used for within-subjects and factorial research designs.

14.2.10. One-Way ANOVA

The one-way ANOVA is used to compare the means of more than two samples ( \(M_1, M_2, \ldots M_G\) ) in a between-subjects design. The null hypothesis is that all the means are equal in the population: \(\mu_1=\mu_2= \ldots = \mu_G\) . The alternative hypothesis is that not all the means in the population are equal.

The test statistic for the ANOVA is called F. It is a ratio of two estimates of the population variance based on the sample data. One estimate of the population variance is called the mean squares between groups ( \(MS_B\) ) and is based on the differences among the sample means. The other is called the mean squares within groups ( \(MS_W\) ) and is based on the differences among the scores within each group. The F statistic is the ratio of the \(MS_B\) to the \(MS_W\) and can therefore be expressed as follows: